2.3.1.2.3. ON Core proxy RADIUS

2.3.1.2.3.1. Definition (What is it?)

A proxy RADIUS is typically deployed in the following cases:

Environments with a large number of authentication requests, where the load must be balanced between the different ON Worker nodes.

Improving high availability (HA) if any of the ON Worker authentication nodes fails.

Multiple Active Directory domains without any relationship between them. In this case, a proxy RADIUS is needed as a frontend to dispatch requests to the backends connected to each Active Directory.

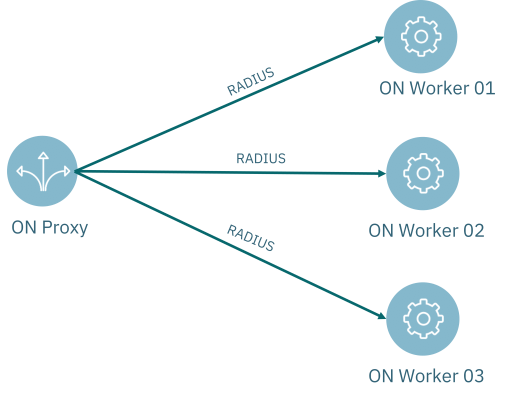

Architecture Example

2.3.1.2.3.2. Proxy Server Configuration

Note

Remember to perform the Basic Configuration before proceeding.

SSH into the ON Core node that will be configured as an ON Proxy.

This node will be the dispatcher for all RADIUS requests; the backends will execute the RADIUS authentication and the policy evaluation (Poleval).

Replace the /etc/raddb/sites-available/default file with /etc/raddb/sites-available/default_proxy_opennac:

mv /etc/raddb/sites-available/default_proxy_opennac /etc/raddb/sites-available/default

Remove the unnecessary RADIUS files:

rm -rf /etc/raddb/mods-enabled/{eap_opennac,sql_opennac,inner-eap_opennac}

rm -rf /etc/raddb/sites-enabled/inner-tunnel

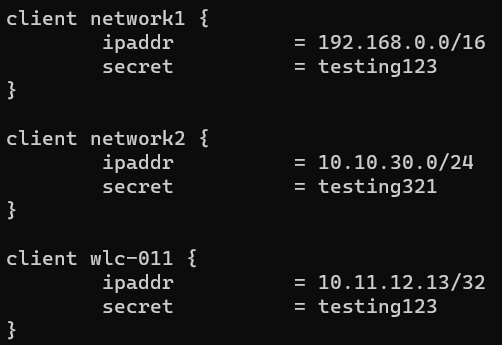

2.3.1.2.3.2.1. Configure the clients.conf

In the clients.conf file, define the networks or hosts that will send requests to the server. Requests are only accepted if they come from a network or host defined in clients.conf and the secret key matches the one defined there.

Edit the list of allowed RADIUS clients:

vim /etc/raddb/clients.conf

Add each network or host that will send requests as a RADIUS client:

client <Network Name> {

ipaddr = <Network IP>/<Network Mask>

secret = <shared key>

}

Note

<shared key> is the string defined on the ON Proxy server in the /etc/raddb/proxy.conf file, used to encrypt the packets between the Proxy Servers and the Network Devices.

2.3.1.2.3.2.2. Configure the proxy.conf

This file defines pools of RADIUS servers to manage fail-over and load balancing. Each pool contains one or more RADIUS servers.

vim /etc/raddb/proxy.conf

Note

It is very important to configure this file in the order described below: servers first, then pools, and finally realms.

2.3.1.2.3.2.2.1. Define servers

These are the backend servers we are going to authenticate against (usually ON Workers). There must be an entry for each backend server.

home_server <nameserver 1> {

type = auth+acct

ipaddr = <IP Address backend domain 1>

port = 1812

secret = <shared key>

#optional

require_message_authenticator = yes

response_window = 20

zombie_period = 40

revive_interval = 120

status_check = status-server

check_interval = 30

num_answers_to_alive = 3

max_outstanding = 65536

coa {

irt = 2

mrt = 16

mrc = 5

mrd = 30

}

}

home_server <nameserver 2> {

type = auth+acct

ipaddr = <IP Address backend domain 2>

port = 1812

secret = <shared key>

#optional

require_message_authenticator = yes

response_window = 20

zombie_period = 40

revive_interval = 120

status_check = status-server

check_interval = 30

num_answers_to_alive = 3

max_outstanding = 65536

coa {

irt = 2

mrt = 16

mrc = 5

mrd = 30

}

}

Note

<nameserver> is the name used to reference this server from a pool.

<IP Address backend domain> is the IP address of the backend that has the trust relationship with the domain.

<shared key> is the string used to encrypt the packets between the Proxy Servers and the Backends. Keep a note of this string, because it must also be configured on the Backend servers.

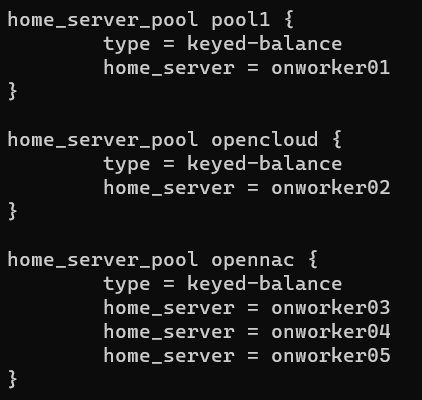

2.3.1.2.3.2.2.2. Create pools

A pool is a group of backend servers (ON Workers) that will handle the requests:

home_server_pool <namepool 1> {

type = keyed-balance

home_server = <nameserver 1>

}

home_server_pool <namepool 2> {

type = keyed-balance

home_server = <nameserver 2>

}

home_server_pool <namepool default> {

type = keyed-balance

home_server = <nameserver 1>

home_server = <nameserver 2>

}

Note

<namepool> is the name used to link the pool to a realm.

<nameserver> is a server name assigned in the previous step.

The default pool handles all requests that do not match any of the domains defined before. It also handles requests with no associated domain, such as MAB requests.

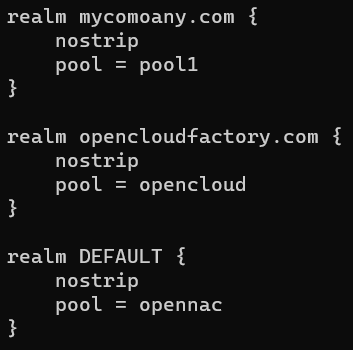
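The pools above use type = keyed-balance. In FreeRADIUS, this type chooses the home server by hashing the Load-Balance-Key control attribute and taking the result modulo the number of servers in the pool, so requests that carry the same key reach the same backend. If no key is set, it behaves like plain load-balance. The shell sketch below only illustrates that idea: the function name pick_home_server, the key, and the server names are hypothetical, and cksum is a stand-in for the hash that FreeRADIUS uses internally.

```shell
#!/bin/sh
# Illustrative only: pick a backend the way a keyed hash balancer would.
# Arguments: balance key, then one or more server names.
pick_home_server() {
    key=$1
    shift                      # remaining arguments are the server names
    # cksum prints "<crc> <byte count>"; keep only the CRC value
    hash=$(printf '%s' "$key" | cksum | cut -d ' ' -f 1)
    # Skip (hash mod number_of_servers) names; the next one is the choice
    shift $(( hash % $# ))
    printf '%s\n' "$1"
}

# Hypothetical key and server names: the same key always maps to the same server
pick_home_server "00:11:22:33:44:55" worker1 worker2
```

Because the choice depends only on the key and the pool size, adding or removing a server from a pool changes which backend a given key maps to.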

2.3.1.2.3.2.2.3. Define realms

If a user logs in with a defined realm syntax, the “realm” portion is matched against the configuration to determine how the request should be handled.

Note

The realm DEFAULT matches any realm that is not explicitly defined.

realm <FQDN from nameserver 1> {

nostrip

pool = <namepool 1>

}

realm <Short domain name from nameserver 1> {

nostrip

pool = <namepool 1>

}

realm <FQDN from nameserver 2> {

nostrip

pool = <namepool 2>

}

realm <Short domain name from nameserver 2> {

nostrip

pool = <namepool 2>

}

realm DEFAULT {

nostrip

pool = <namepool default>

}
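The effect of the realm definitions above can be modelled with a short shell sketch. It is not FreeRADIUS code: it assumes user names in the user@realm form, and the realm names (corp1.example.com, CORP1, and so on) and pool names are hypothetical examples standing in for the placeholders used above.

```shell
#!/bin/sh
# Illustrative model of the realm lookup: explicit realms first, DEFAULT last.
pool_for_user() {
    case $1 in
        *@*) realm=${1##*@} ;;   # text after the last "@"
        *)   realm="" ;;         # no realm, for example a MAB request
    esac
    case $realm in
        corp1.example.com|CORP1) echo "pool_corp1" ;;
        corp2.example.com|CORP2) echo "pool_corp2" ;;
        *)                       echo "pool_default" ;;  # realm DEFAULT
    esac
}

pool_for_user "alice@corp1.example.com"   # prints pool_corp1
pool_for_user "bob@CORP2"                 # prints pool_corp2
pool_for_user "001122334455"              # prints pool_default
```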

After modifying the proxy.conf file, restart the service to apply the changes:

systemctl restart radiusd
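Since a syntax error in proxy.conf prevents the service from starting, it can be useful to check the configuration before restarting. A minimal sketch, assuming the FreeRADIUS binary is available as radiusd on this node; the function name and the log path are hypothetical:

```shell
#!/bin/sh
# Restart radiusd only if the configuration check passes.
# radiusd -C parses the configuration and exits; -X adds debug details.
restart_radiusd_if_valid() {
    if radiusd -XC > /tmp/radiusd-check.log 2>&1; then
        systemctl restart radiusd
    else
        echo "Configuration check failed, see /tmp/radiusd-check.log" >&2
        return 1
    fi
}

# Usage (on the ON Proxy node):
# restart_radiusd_if_valid
```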

2.3.1.2.3.2.3. Disable unnecessary services

chkconfig named off; /etc/init.d/named stop

chkconfig dhcpd off; /etc/init.d/dhcpd stop

chkconfig gearmand off; /etc/init.d/gearmand stop

chkconfig snmptrapd off; /etc/init.d/snmptrapd stop

chkconfig httpd off; /etc/init.d/httpd stop

chkconfig opennac off; /etc/init.d/opennac stop

chkconfig dhcp-helper-reader off; /etc/init.d/dhcp-helper-reader stop

2.3.1.2.3.2.4. Disable OpenNAC crons

Remove the following file:

rm /etc/cron.d/opennac-scheduler

Additionally, the backup cron entry should be modified. A proxy RADIUS deployment has no internal database, so the database export step will fail. To prevent this, add --avoid-db-dump to the backup script call as follows:

26 23 * * * root /usr/share/opennac/utils/backup/opennac_backup.sh --avoid-db-dump > /dev/null 2>&1

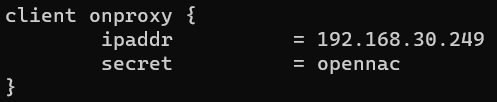

2.3.1.2.3.2.5. ON Worker’s Servers Configuration

The proxy RADIUS needs a shared key to send requests to each ON Worker, so its connection values must be included in the /etc/raddb/clients.conf file of the worker.

Edit the list of allowed RADIUS clients:

vim /etc/raddb/clients.conf

Add the proxy node as a RADIUS client:

client <Proxy Server Name> {

ipaddr = <Proxy Server IP>

secret = <shared key>

}

Restart the RADIUS service:

systemctl restart radiusd

Note

<shared key> is the string defined on the ON Proxy server in the /etc/raddb/proxy.conf file, used to encrypt the packets between the Proxy Servers and the ON Workers (Backends).
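Once the worker has been restarted, the trust between the proxy and the worker can be checked from the ON Proxy node. A possible sketch, assuming the radtest utility from the FreeRADIUS client tools is installed there; the function name, the test account, and the IP address are hypothetical placeholders:

```shell
#!/bin/sh
# Send one Access-Request directly to a backend.
# Arguments: user, password, backend IP, shared key.
test_backend() {
    # radtest syntax: user password server[:port] nas-port-number secret
    radtest "$1" "$2" "$3:1812" 0 "$4"
}

# Usage (hypothetical values, run on the ON Proxy node):
# test_backend testuser 'testpass' 192.0.2.10 '<shared key>'
```

A reply of either kind (Access-Accept or Access-Reject) generally indicates that the worker accepts the proxy as a client. No reply usually points to a wrong shared key, a missing client entry, or a filtered port.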

2.3.1.2.3.2.6. Balance traffic between ON Core workers

To balance the traffic between the ON Core workers we need to install the haproxy service if it is not installed:

dnf install haproxy

Then open the configuration file:

vi /etc/haproxy/haproxy.cfg

Add the following configuration to the file. This example assumes three workers on the network; adapt the server entries to match your own workers.

#---------------------------------------------------------------------

# Example configuration for a possible web application. See the

# full configuration options online.

#

# https://www.haproxy.org/download/1.8/doc/configuration.txt

#

#---------------------------------------------------------------------

#---------------------------------------------------------------------

# Global settings

#---------------------------------------------------------------------

global

# to have these messages end up in /var/log/haproxy.log you will

# need to:

#

# 1) configure syslog to accept network log events. This is done

# by adding the '-r' option to the SYSLOGD_OPTIONS in

# /etc/sysconfig/syslog

#

# 2) configure local2 events to go to the /var/log/haproxy.log

# file. A line like the following can be added to

# /etc/sysconfig/syslog

#

# local2.* /var/log/haproxy.log

#

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

# turn on stats unix socket

stats socket /var/lib/haproxy/stats

# utilize system-wide crypto-policies

ssl-default-bind-ciphers PROFILE=SYSTEM

ssl-default-server-ciphers PROFILE=SYSTEM

#---------------------------------------------------------------------

# common defaults that all the 'listen' and 'backend' sections will

# use if not designated in their block

#---------------------------------------------------------------------

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

#---------------------------------------------------------------------

# main frontend which proxys to the backends

#---------------------------------------------------------------------

frontend gearman_listener

bind localhost:4730

mode tcp

option tcplog

default_backend tcp_backend_gearman

#---------------------------------------------------------------------

# Api workers frontend

#---------------------------------------------------------------------

frontend api-workers

mode http

bind *:80

default_backend http_backend_workers

#---------------------------------------------------------------------

# gearman workers backend

#---------------------------------------------------------------------

backend tcp_backend_gearman

balance roundrobin

mode tcp

server worker1 onworker01:4730 check

server worker2 onworker02:4730 check

server worker3 onworker03:4730 check

#---------------------------------------------------------------------

# Api workers backend

#---------------------------------------------------------------------

backend http_backend_workers

balance roundrobin

mode http

server worker1 onworker01:80 check

server worker2 onworker02:80 check

server worker3 onworker03:80 check
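Before relying on the new configuration, it can be validated with HAProxy's built-in check mode. A minimal sketch, assuming haproxy is managed by systemd on this node; the function name is hypothetical:

```shell
#!/bin/sh
# Validate haproxy.cfg, then enable and restart the service.
apply_haproxy_config() {
    cfg=${1:-/etc/haproxy/haproxy.cfg}
    # -c checks the configuration file and exits without starting the proxy
    haproxy -c -f "$cfg" || return 1
    systemctl enable haproxy
    systemctl restart haproxy
}

# Usage:
# apply_haproxy_config
```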

Finally, edit the iptables rules:

vi /etc/sysconfig/iptables

On ports 80 and 443, only requests from the ON Aggregator component must be accepted.

# HTTP ports

-A INPUT -s {{ onaggregator_IP }} -p tcp -m state --state NEW -m tcp --dport 80 -j ACCEPT

-A INPUT -s {{ onaggregator_IP }} -p tcp -m state --state NEW -m tcp --dport 443 -j ACCEPT

Then, we need to configure ON Aggregators to send polevals to workers.