3.1.2. Ansible configuration from OpenNAC OVA

This section covers the automated configuration, using Ansible, of OpenNAC Enterprise servers that have already been deployed from an OVA.

Before configuring the nodes, the OVAs must be deployed, one image per node. Note that the Core nodes (Principal, Proxy, and Worker) share the same image, and the Analytics and Sensor nodes share the same OVA:

Download the images from: https://repo-opennac.opencloudfactory.com/ova/

Core Principal → opennac_core_<ONCORE_FULL_VERSION>_img.ova

Core Worker → opennac_core_<ONCORE_FULL_VERSION>_img.ova

Core Proxy → opennac_core_<ONCORE_FULL_VERSION>_img.ova

Analytics → opennac_analytics_<ONNALYTICS_FULL_VERSION>_img.ova

Sensor → opennac_analytics_<ONNALYTICS_FULL_VERSION>_img.ova

Once the OVAs are deployed, take into account the following:

The default SSH credentials (username/password) for all images are root/opennac.

The default HTTPS credentials (username/password) for the Principal node are admin/opennac.

Every node needs an accessible IP address and an internet connection.

An SSH key pair must be generated on the Core Principal and copied to the Principal itself and to the other nodes.

Note

The playbooks are launched from the OpenNAC Enterprise Principal node, because it is the node that contains the playbook code.

3.1.2.1. Giving the nodes an IP

Some OVAs, such as the Analytics one, come with two network interfaces (eth0 and eth1). Use eth0 as the management interface and for communication between nodes.

Others have only one interface by default. Configure the interfaces according to your needs.

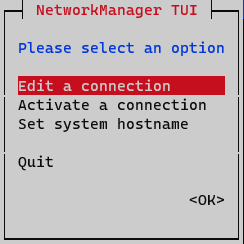

To assign the IP address, run the NetworkManager text user interface:

nmtui

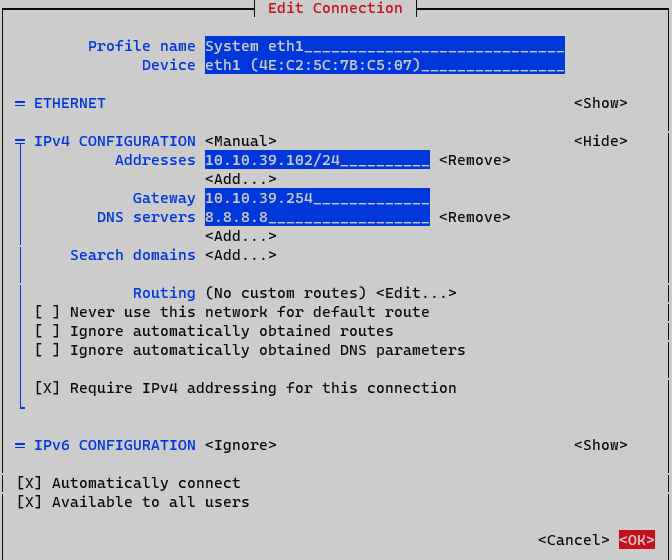

In the initial window, select Edit a connection.

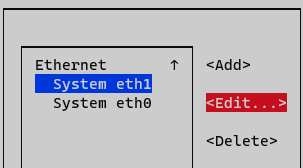

Select the interface and press Edit.

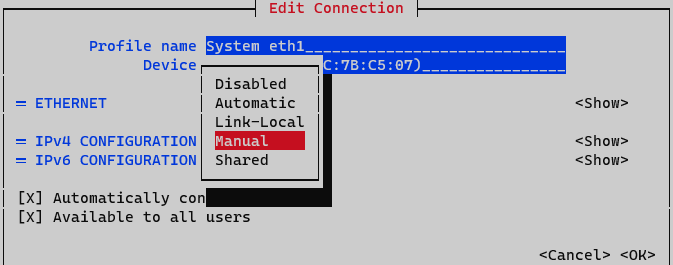

In IPv4 Configuration, select Manual, then select <Show> to display the IPv4 settings:

Addresses: Add node IP address with netmask (<IP>/<MASK>)

Gateway: Add a default gateway

DNS Servers: Add a DNS server (e.g. Google's 8.8.8.8)

Check the Require IPv4 addressing for this connection option and finish by selecting <OK> at the bottom.

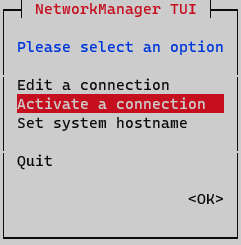

To apply the changes, the interface must be deactivated and activated again. In the NetworkManager menu, select Activate a connection.

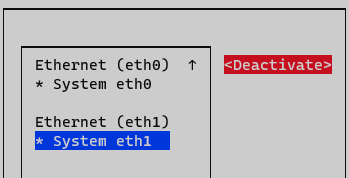

Deactivate and then activate the interface, and go back to the initial menu.

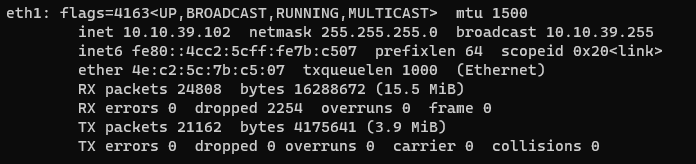

The interface is now configured. You can verify it from the CLI with ifconfig or ip a:

ifconfig

Note

Interface names may differ depending on the OS version, e.g. ens18.

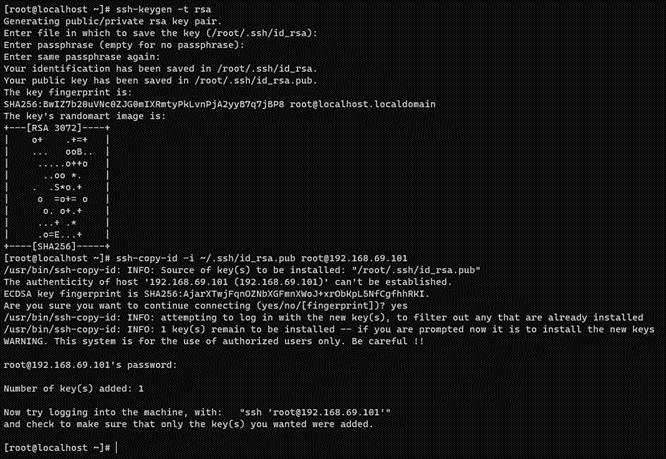
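If you prefer a non-interactive alternative to the nmtui steps above (for example, to script several nodes), you can apply the same settings with nmcli. The sketch below is a hypothetical helper and is not shipped with OpenNAC. It assumes that the NetworkManager connection has the same name as the interface (check this with nmcli connection show) and that you replace the example address, gateway and DNS with your own values. Setting DRY_RUN=1 only prints the commands so you can review them.

```shell
#!/bin/sh
# Hypothetical helper (not shipped with OpenNAC): static IPv4 via nmcli instead of nmtui.
# Assumption: the NetworkManager connection has the same name as the interface (e.g. eth0).

# Run a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Usage: set_static_ipv4 <connection> <IP>/<MASK> <gateway> <dns>
set_static_ipv4() {
    conn="$1"; addr="$2"; gw="$3"; dns="$4"
    # Same fields as the nmtui form: Manual method, address/mask, gateway, DNS,
    # and "Require IPv4 addressing for this connection" (ipv4.may-fail no).
    run nmcli connection modify "$conn" ipv4.method manual ipv4.addresses "$addr" \
        ipv4.gateway "$gw" ipv4.dns "$dns" ipv4.may-fail no
    # Deactivate and reactivate the connection so the changes are applied.
    run nmcli connection down "$conn"
    run nmcli connection up "$conn"
}
```

For example, DRY_RUN=1 set_static_ipv4 eth0 192.168.69.5/24 192.168.69.1 8.8.8.8 prints the three nmcli commands without running them. Run the real commands from the VM console rather than over SSH, because deactivating the interface drops any SSH session that uses it.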

3.1.2.2. Creating and copying the ssh key pair

These steps are only necessary on the Core Principal node.

On the console, run:

ssh-keygen -t rsa -C ansibleKey

Press Enter at every prompt to accept the default values (default key location and no passphrase).

Now copy the generated public key to the nodes:

ssh-copy-id -i ~/.ssh/id_rsa.pub root@<nodes_IP>

where <nodes_IP> is the IP of each available node: ON Principal itself, ON Worker, ON Proxy, ON Analytics and/or ON Sensor.

Note

Copy the key to all the nodes, including the ON Principal itself, from which the command is executed.

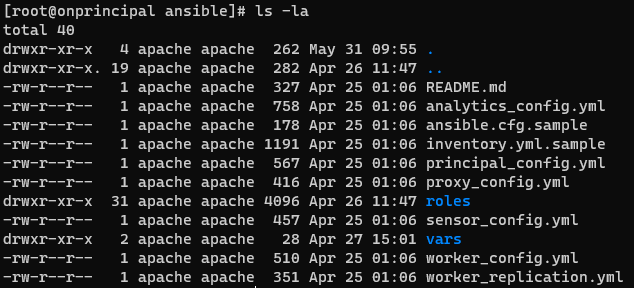
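Rather than running ssh-copy-id once per node, you can loop over all node IPs and then confirm that key-based login works. This is a hypothetical helper sketch, not part of OpenNAC. It assumes the key was generated at the default location ($HOME/.ssh/id_rsa). ssh-copy-id still asks for each node's root password (opennac by default). The check uses ssh with BatchMode=yes, so a node that would still prompt for a password is reported as FAILED instead of hanging.

```shell
#!/bin/sh
# Hypothetical helper (not shipped with OpenNAC): run on the Core Principal.

# Run a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Usage: copy_key_to_nodes <principal_ip> <worker_ip> ... (include the Principal itself)
copy_key_to_nodes() {
    for ip in "$@"; do
        run ssh-copy-id -i "$HOME/.ssh/id_rsa.pub" "root@$ip"
    done
}

# BatchMode=yes makes ssh fail instead of prompting for a password,
# so any node where the key was not installed is reported.
check_key_login() {
    status=0
    for ip in "$@"; do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "root@$ip" true; then
            echo "OK      $ip"
        else
            echo "FAILED  $ip" >&2
            status=1
        fi
    done
    return "$status"
}
```

To skip the ssh-keygen prompts as well, ssh-keygen -t rsa -C ansibleKey -N "" -f ~/.ssh/id_rsa creates the same default key with an empty passphrase.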

3.1.2.3. Locating the playbooks

As mentioned above, the playbooks and the necessary code are on the Core Principal. SSH into the Principal and go to /usr/share/opennac/utils/ansible:

cd /usr/share/opennac/utils/ansible

3.1.2.4. Repository configuration and corporate proxy

Before updating the nodes, note that:

The nodes must have internet access, either directly or through a corporate proxy.

You must have credentials for the OpenNAC Enterprise repositories to update the nodes.

To configure both, use the configUpdates.sh script available on each node. It is located in the following paths:

ON Core

/usr/share/opennac/api/scripts/configUpdates.sh

ON Analytics

/usr/share/opennac/analytics/scripts

ON Aggregator

/usr/share/opennac/aggregator/scripts

ON Sensor

/usr/share/opennac/sensor/scripts

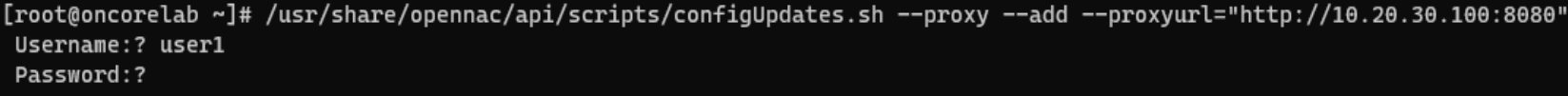

Corporate Proxy Authorization:

/usr/share/opennac/api/scripts/configUpdates.sh --proxy --add --proxyurl="http://<PROXY_IP>:<PROXY_PORT>"

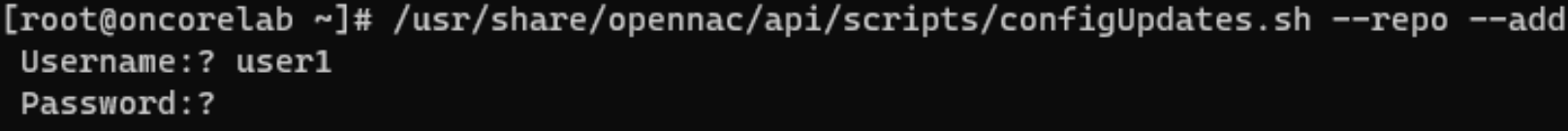

Repository credentials:

/usr/share/opennac/api/scripts/configUpdates.sh --repo --add

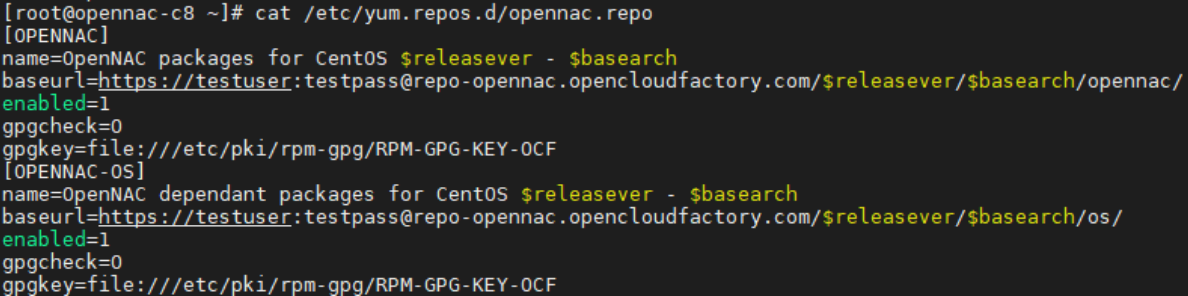
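The proxy and repository commands above use the Core path. On the other nodes, the script lives in the node's own directory. The hypothetical helper below maps a role to its configUpdates.sh path using the locations listed in this section. It assumes, without confirmation from this guide, that the --proxy and --repo options shown for the Core script work the same way on every node.

```shell
#!/bin/sh
# Hypothetical helper: return the configUpdates.sh path for a node role,
# based on the locations listed in this section.
config_updates_path() {
    case "$1" in
        principal|worker|proxy|core) dir=/usr/share/opennac/api/scripts ;;
        analytics) dir=/usr/share/opennac/analytics/scripts ;;
        aggregator) dir=/usr/share/opennac/aggregator/scripts ;;
        sensor) dir=/usr/share/opennac/sensor/scripts ;;
        *) echo "unknown role: $1" >&2; return 1 ;;
    esac
    echo "$dir/configUpdates.sh"
}
```

For example, on an Analytics node you would run "$(config_updates_path analytics)" --repo --add.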

We can verify that the repository is correctly configured by checking the /etc/yum.repos.d/opennac.repo file:

cat /etc/yum.repos.d/opennac.repo

3.1.2.5. Configuration steps

Note

The OpenNAC Enterprise packages must be updated to the latest version.

To update the Core Principal, run the following command:

dnf clean all && dnf update -y && /usr/share/opennac/utils/scripts/restartOpenNACServices.sh && /usr/share/opennac/api/scripts/updatedb.php --assumeyes

On the Core Principal server, go to the /usr/share/opennac/utils/ansible folder. It contains many files; the important ones are:

principal_config.yml: Configuration of a Core Principal from OVA.

worker_config.yml: Configuration and replication of a Core Worker from OVA. This playbook calls the replication playbook (worker_replication.yml).

proxy_config.yml: Configuration of a Core Proxy from OVA.

analytics_config.yml: Configuration of an Analytics from OVA.

sensor_config.yml: Configuration of a Sensor from OVA.

inventory.yml.sample: File with the IPs and hostnames of the nodes

ansible.cfg.sample: File with the basic Ansible configuration, where you must indicate the path to the previously generated SSH key.

vars/basic_vars.yml.sample: the file with the vars to fill in with your data.

Follow the steps carefully in the order listed.
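Each of the following steps starts by copying a .sample file. The hypothetical helper below creates the three working copies at once. It never overwrites a file you have already edited, and instead suggests a diff against the sample so you can spot new options. It is a convenience sketch, not part of OpenNAC. The default directory is the one given in this guide.

```shell
#!/bin/sh
# Hypothetical helper: create the working copies of the three .sample files,
# never overwriting a file that already exists.
prepare_config_files() {
    dir="${1:-/usr/share/opennac/utils/ansible}"
    for f in inventory.yml ansible.cfg vars/basic_vars.yml; do
        if [ -e "$dir/$f" ]; then
            # Keep your edits; compare with the sample to spot new options.
            echo "kept    $f (check: diff $dir/$f.sample $dir/$f)"
        else
            cp "$dir/$f.sample" "$dir/$f" && echo "created $f"
        fi
    done
}
```

You still need to edit each file as described in the following steps.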

3.1.2.5.1. Building the inventory

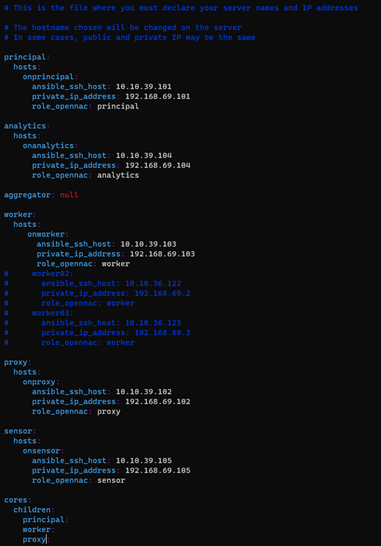

The inventory is filled in with the IPs of the servers. First, copy inventory.yml.sample to inventory.yml and then edit the file (for example with vim) to add the IPs as shown below. Always check the .sample file first for possible updates.

cp inventory.yml.sample inventory.yml

vim inventory.yml

The structure followed is:

    group:
      hosts:
        <hostname>:
          ansible_ssh_host: <PUBLIC_IP>
          private_ip_address: <PRIVATE_IP>
          role_opennac: <role>

where:

<hostname>: the name of the server. If it does not match the current hostname, the playbook changes it to the one written in the inventory.

<PUBLIC_IP>: the accessible IP needed to make the SSH connection.

<PRIVATE_IP>: the internal IP needed in the servers to fill the /etc/hosts file or to communicate with each other. Sometimes you may not have this IP; in that case, fill it with the <PUBLIC_IP> as well.

<role>: the OpenNAC role of the node, used for example to decide which healthcheck to configure (aggregator, analytics, principal, proxy, sensor, worker).

An example could be:

    analytics:
      hosts:
        ana01-mycompany:
          ansible_ssh_host: 10.250.80.1
          private_ip_address: 192.168.69.5
          role_opennac: analytics
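The inventory rules above can be sketched as a small generator. It falls back to the public IP when there is no private IP, and it only accepts the listed roles. This is a hypothetical helper, not part of OpenNAC. It prints one host entry indented to sit under a group's hosts: key, and you should still take the group names from inventory.yml.sample.

```shell
#!/bin/sh
# Hypothetical helper: print one host entry in the inventory structure above.
# Usage: inventory_host <hostname> <PUBLIC_IP> <PRIVATE_IP or ""> <role>
# An empty <PRIVATE_IP> reuses <PUBLIC_IP>, as recommended above.
inventory_host() {
    host="$1"; pub="$2"; priv="${3:-$2}"; role="$4"
    case "$role" in
        aggregator|analytics|principal|proxy|sensor|worker) ;;
        *) echo "invalid role: $role" >&2; return 1 ;;
    esac
    printf '    %s:\n' "$host"
    printf '      ansible_ssh_host: %s\n' "$pub"
    printf '      private_ip_address: %s\n' "$priv"
    printf '      role_opennac: %s\n' "$role"
}
```

For example, printing "analytics:" and "  hosts:" followed by inventory_host ana01-mycompany 10.250.80.1 192.168.69.5 analytics reproduces the example entry.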

Note

You can comment or uncomment servers according to your needs, as shown in the sample with the workers for HA deployments. Do NOT comment out the group itself.

Warning

These playbooks are intended for an initial, one-time deployment. Adding new nodes to the same infrastructure after the initial deployment is currently not supported, so be careful when running these playbooks afterwards.

3.1.2.5.2. Configuring ansible

Configuring ansible.cfg follows the same approach. Copy ansible.cfg.sample to ansible.cfg and edit it to set the path to your private key (the one generated in the prerequisites) and the path to your inventory file (inventory.yml). The file contains more variables you may want to change, but these are the basic, recommended ones. Always check the .sample file first for possible updates.

cp ansible.cfg.sample ansible.cfg

vim ansible.cfg

The content in the file is the following:

    [defaults]
    timeout=30
    inventory=inventory.yml
    host_key_checking=False
    remote_user = root
    private_key_file=~/.ssh/id_rsa

    [ssh_connection]
    control_path = %(directory)s/%%h-%%r
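A wrong private_key_file or inventory path is an easy mistake to make here. The hypothetical pre-flight check below reads both values from ansible.cfg and confirms that the files exist. It is a sketch, not part of OpenNAC. It assumes you run it from the ansible folder, because relative paths are checked against the current directory, and it only expands a leading "~/".

```shell
#!/bin/sh
# Hypothetical pre-flight check: verify that the inventory and private key
# referenced in ansible.cfg exist. Run it from the ansible folder.

# Print the value of "<key> = <value>" (spaces around "=" are optional).
cfg_value() {
    sed -n "s/^[[:space:]]*$1[[:space:]]*=[[:space:]]*//p" "$2" | head -n 1
}

check_ansible_cfg() {
    cfg="${1:-ansible.cfg}"
    status=0
    inv=$(cfg_value inventory "$cfg")
    key=$(cfg_value private_key_file "$cfg")
    # Expand a leading "~/" the way the shell would.
    case "$key" in "~/"*) key="$HOME/${key#"~/"}" ;; esac
    for f in "$inv" "$key"; do
        if [ -n "$f" ] && [ -f "$f" ]; then
            echo "found   $f"
        else
            echo "missing ${f:-<empty value>}" >&2
            status=1
        fi
    done
    return "$status"
}
```

Once the check passes, ansible all -m ping tests the SSH connection to every host in the inventory using these settings. Any host reported as unreachable points to a wrong IP or a missing key.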

3.1.2.5.3. Filling in the vars

The variables are in the basic_vars.yml.sample file inside /usr/share/opennac/utils/ansible/vars. It is very important to review all of them and understand their usage (explained below). You only have to complete the variables that apply to your architecture; e.g., if your deployment does not have a sensor, leave the sensor variables with their default values.

Copy basic_vars.yml.sample to basic_vars.yml and edit it to fill in the variables (most of them can keep their default values). Pay special attention to the repository user and password and to the sensor interfaces. A misconfigured variable can cause the execution to fail. Always check the .sample file first for possible updates.

cp vars/basic_vars.yml.sample vars/basic_vars.yml

vim vars/basic_vars.yml

Note

DO NOT comment out or delete the variables (you may delete lines only where it is explicitly allowed, and never the variable name). If you are not going to use a variable, leave it with its default value.
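To catch a variable that was accidentally deleted or commented out, you can compare the top-level keys of your file with those of the sample. The hypothetical check below does this with grep, sort and comm. It is a sketch, not part of OpenNAC. It only looks at unindented "name:" keys, so nested entries such as the items of clients_data are not compared.

```shell
#!/bin/sh
# Hypothetical check: list top-level variables present in the sample file
# but missing from (or commented out in) your copy.
list_top_level_keys() {
    grep -oE '^[A-Za-z_][A-Za-z0-9_]*:' "$1" | tr -d ':' | sort -u
}

missing_vars() {
    sample="$1"; actual="$2"
    a=$(mktemp); b=$(mktemp)
    list_top_level_keys "$sample" > "$a"
    list_top_level_keys "$actual" > "$b"
    # comm -23 prints the lines that appear only in the first (sample) list.
    comm -23 "$a" "$b"
    rm -f "$a" "$b"
}
```

Run missing_vars vars/basic_vars.yml.sample vars/basic_vars.yml from the ansible folder. Empty output means that no top-level variable was removed.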

Common variables: These variables are mandatory for every deployment.

timezone_custom: The timezone of the server (run timedatectl list-timezones to list the valid timezones)

ntpserv: NTP servers used for time synchronization

deploy_testing_version: Set to “true” to use the testing version or “false” for the stable version (default is “false”, the stable version)

repo_auth: The user/password to access the OpenNAC Enterprise repository

Principal configuration.

criticalAlertEmail: Email or set of emails where you want to receive alerts

criticalAlertMailTitle: Title of the alert email

criticalAlertMailContent: Content of the alert email

clients_data: To configure /etc/raddb/clients.conf, add as many clients as you need

ip: ‘X.X.X.X/X’

shortname: Desired client name

secret: Desired password

relayhostName: FQDN of the SMTP server used to relay the mails (next-hop destination(s) for non-local mail). Used to configure /etc/postfix/main.cf and /etc/postfix/generic

relayhostPort: Port of the “relayhostName” server used to relay the mails. Used to configure /etc/postfix/main.cf and /etc/postfix/generic

mydomain: The local internet domain name. Used to configure /etc/postfix/main.cf and /etc/postfix/generic

emailAddr: The email address used as the sender of the alerts. Used to configure /etc/postfix/main.cf and /etc/postfix/generic

Analytics and Aggregator.

netflow: set to “true” to enable NetFlow; this requires at least 64GB of RAM (default is “false”)
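Before setting netflow to “true”, you can check the 64GB RAM requirement on the Analytics or Aggregator node. This hypothetical check reads MemTotal from /proc/meminfo. It assumes that "64GB" means 64,000,000,000 bytes (62,500,000 KiB). The reason is that a machine with 64 GiB installed reports somewhat less than 64 GiB in MemTotal because of kernel reservations, and a binary threshold would wrongly reject it.

```shell
#!/bin/sh
# Hypothetical check before enabling netflow on an Analytics/Aggregator node.
# /proc/meminfo reports MemTotal in KiB (labelled kB).
# Assumption: 64GB = 64 000 000 000 bytes = 62 500 000 KiB.
netflow_ram_ok() {
    meminfo="${1:-/proc/meminfo}"
    total_kib=$(awk '/^MemTotal:/ {print $2}' "$meminfo")
    [ "${total_kib:-0}" -ge 62500000 ]
}
```

For example: netflow_ram_ok && echo "enough RAM for netflow" || echo "keep netflow: false".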

Sensor.

LOCAL_MGMT_IFACE: the interface used to access the device for management (it must be the interface assigned for management, otherwise the installation will fail)

SNIFFER_INTERFACE: the interface that captures packets (it must be the interface assigned to the SPAN, otherwise the installation will fail)

deployment_mode: the capture method, either SPAN mode or SSH mode. Set this variable to ‘SSH’ or ‘SPAN’ (default is “SPAN”)

remoteHostIP: the IP of the remote device whose traffic is captured (only if deployment_mode is ‘SSH’)

remoteInterface: the interface of the remote device where the traffic is collected (only if deployment_mode is ‘SSH’)

remoteHostPassword: the password used to connect to the remote device, for example opennac (only if deployment_mode is ‘SSH’)
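Because a wrong interface name makes the Sensor installation fail, it is worth confirming on the Sensor that both interfaces exist before launching the playbook. This is a hypothetical pre-flight sketch that looks the names up under /sys/class/net; the optional third argument exists only for testing. The warning about identical interfaces reflects an assumption that, in SPAN mode, management and capture use separate NICs.

```shell
#!/bin/sh
# Hypothetical pre-flight check, run on the Sensor: confirm that the interfaces
# set in LOCAL_MGMT_IFACE and SNIFFER_INTERFACE exist.
check_sensor_ifaces() {
    mgmt="$1"; sniffer="$2"; net_dir="${3:-/sys/class/net}"
    status=0
    for iface in "$mgmt" "$sniffer"; do
        if [ -n "$iface" ] && [ -e "$net_dir/$iface" ]; then
            echo "found   $iface"
        else
            echo "missing ${iface:-<empty>}" >&2
            status=1
        fi
    done
    if [ "$mgmt" = "$sniffer" ]; then
        # Assumption: in SPAN mode management and capture use different NICs.
        echo "warning: LOCAL_MGMT_IFACE and SNIFFER_INTERFACE are the same" >&2
    fi
    return "$status"
}
```

For example, check_sensor_ifaces eth0 eth1 on a Sensor deployed from the Analytics OVA, adjusting the names to your OS (e.g. ens18).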

Worker replication

mysql_root_password: password for mysql root user

mysql_replication_password_nagios: password for mysql nagios user

path: the path where the .sql dump file is saved

Proxy

sharedkey: the shared secret used to encrypt the packets between the Proxy servers and the backends

pools_data: to configure /etc/raddb/proxy.conf, add or delete as many as you need

namepool: the name of the pool

namerealm: the name of the realm

clients_data_PROXY: to configure /etc/raddb/clients.conf, add or delete as many clients as you need

ip: ‘X.X.X.X/X’

shortname: desired client name

secret: the previously defined shared key
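The sharedkey value is reused as the secret of the clients_data_PROXY entries, so it should be a strong random string. This hypothetical helper is one way to generate it. It reads 16 random bytes from /dev/urandom and prints them as 32 hexadecimal characters. Hex is a deliberate choice: it avoids characters that would need quoting in YAML or in the RADIUS configuration files.

```shell
#!/bin/sh
# Hypothetical helper: generate a random value for sharedkey
# (and therefore for the secret of the clients_data_PROXY entries).
# 16 random bytes -> 32 lowercase hexadecimal characters.
generate_shared_key() {
    od -An -tx1 -N16 /dev/urandom | tr -d ' \n'
}
```

Generate the key once and paste the same value into sharedkey and into every secret that must match it.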

3.1.2.6. Launching the playbooks

Now we can launch the playbooks in the order listed. To do so, on the Core Principal server, go to the /usr/share/opennac/utils/ansible folder.

You only have to launch the playbooks that apply to your architecture; e.g., if you do not have a sensor, do not launch the sensor_config.yml playbook.

Each role has its own playbook, named <role_name>_config.yml. The Principal playbook must always be launched first, and each playbook must finish before you launch the next one.

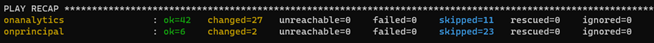

Note

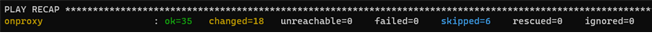

The numbers of “ok”, “changed”, “skipped” or “ignored” tasks may vary depending on the playbook, the number of nodes, etc. Only “unreachable” and “failed” must always be 0; otherwise, something went wrong and you should review the tasks.

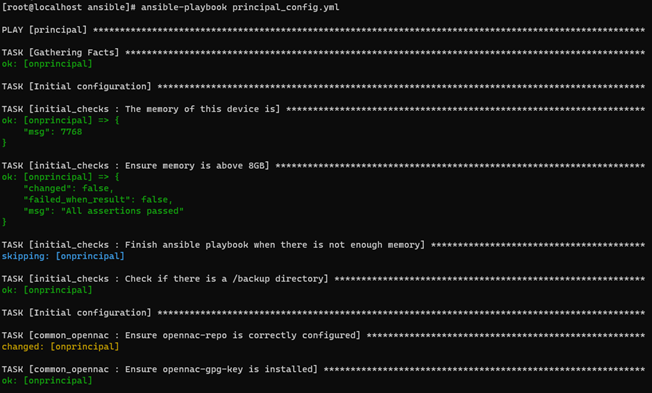
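The launch rules above can be wrapped in a small script. It always runs the Principal playbook first, then the other roles in the order of the following sections, one at a time, and stops at the first failure. It relies on ansible-playbook returning a non-zero exit status when a host fails or is unreachable. This is a hypothetical sketch, not part of OpenNAC. Setting DRY_RUN=1 only prints the commands.

```shell
#!/bin/sh
# Hypothetical wrapper, run from /usr/share/opennac/utils/ansible on the Principal.
# Arguments: the other roles in your architecture (analytics sensor worker proxy).
run_playbooks() {
    for role in principal analytics sensor worker proxy; do
        # The Principal playbook always runs; other roles only if requested.
        if [ "$role" != principal ]; then
            case " $* " in *" $role "*) ;; *) continue ;; esac
        fi
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "ansible-playbook ${role}_config.yml"
            continue
        fi
        # Non-zero exit status means failed or unreachable hosts: stop here.
        ansible-playbook "${role}_config.yml" || {
            echo "${role}_config.yml failed: review the failed or unreachable tasks" >&2
            return 1
        }
    done
}
```

For example, run_playbooks analytics worker runs principal_config.yml, analytics_config.yml and worker_config.yml, in that order.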

3.1.2.6.1. Principal Configuration

First, we will configure the Principal itself. Launch the following command:

ansible-playbook principal_config.yml

You may see some warnings; they are not critical. Some tasks may also fail but be ignored (they are marked “…ignoring”, or the playbook simply continues); you can disregard these ignored failures. Once the installation process finishes, the result should look as follows:

When the execution finishes, you can launch the next playbooks, letting each one finish before starting the next.

3.1.2.6.2. Analytics Configuration

To configure the Analytics, launch the following command (from the Principal):

ansible-playbook analytics_config.yml

Once the configuration process finishes on Analytics, the result should look as follows:

3.1.2.6.3. Sensor Configuration

To configure the Sensor, launch the following command (from the Principal):

ansible-playbook sensor_config.yml

Once the configuration process finishes on Sensor, the result should look as follows:

3.1.2.6.4. Worker Configuration (and Replication)

To configure the Worker, launch the following command (from the Principal):

ansible-playbook worker_config.yml

Once the configuration process finishes on Worker, the result should look as follows:

3.1.2.6.5. Proxy Configuration

To configure the Proxy, launch the following command (from the Principal):

ansible-playbook proxy_config.yml

Once the configuration process finishes on Proxy, the result should look as follows: