openNAC Slave Node Deployment

The following steps describe how to get an openNAC Core Cluster up and running.

- First, create an openNAC Core instance that will act as the Core Master.

- Once the Master is up and running, deploy an openNAC Core Slave instance.

- Establish a trusted connection between both nodes.

Once the Master and Slave are in place, a Load Balance policy must be established.

This kind of deployment can be used with or without a Proxy Radius Architecture.

To add and deploy a new node, follow the steps below:

MySQL replication

On all Servers

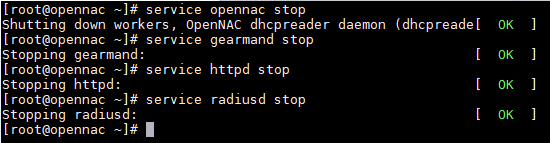

Stop services

service opennac stop

service gearmand stop

service httpd stop

service radiusd stop
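The four commands above can be wrapped in a small helper so the same order is used on every server. The following is a minimal sketch, not part of openNAC: the function name `opennac_services` and the `DRY_RUN` variable are hypothetical, and by default the helper only prints the commands instead of running them.

```shell
# Service names and their order are taken from the steps above.
OPENNAC_SERVICES="opennac gearmand httpd radiusd"

# opennac_services ACTION
# Prints (DRY_RUN=1, the default in this sketch) or runs
# "service <name> ACTION" for each service, in the documented order.
opennac_services() {
    for svc in $OPENNAC_SERVICES; do
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "service $svc $1"
        else
            service "$svc" "$1" || return 1
        fi
    done
}

# Example: show what would be executed.
opennac_services stop
```

Running `DRY_RUN=0 opennac_services stop` as root would execute the commands. The same helper called with `start` covers the step where the services are started again at the end of the procedure.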

On Master Configuration

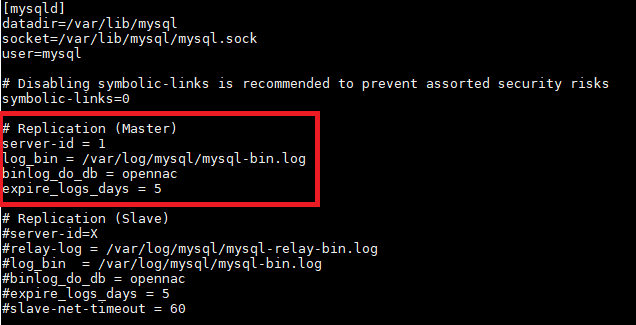

- Edit /etc/my.cnf and uncomment the “Replication (Master)” section.

vim /etc/my.cnf

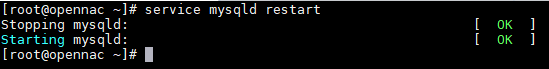

- Restart mysql.

service mysqld restart

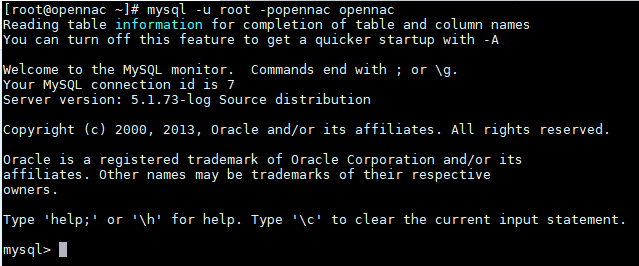

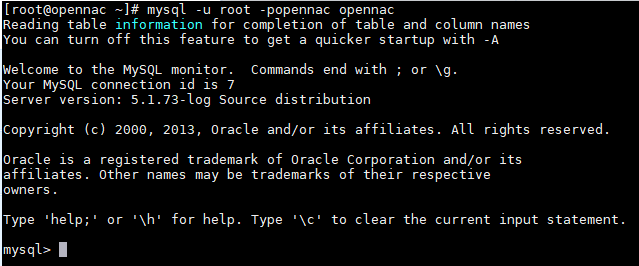

- Access mysql.

mysql -u root -p<mysql_root_password> opennac

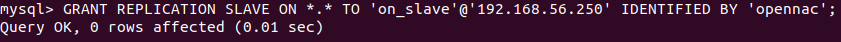

- Grant permissions

GRANT REPLICATION SLAVE ON *.* TO 'on_slave'@'<slave_ip>' IDENTIFIED BY '<password>';

Note

- Run this statement once for each slave, using that slave's IP address.

- This password should be unique and stored somewhere safe, such as a password vault.

- This password will be used to configure all slaves.

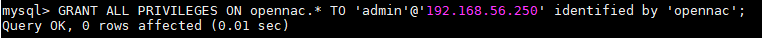

- Grant privileges

GRANT ALL PRIVILEGES ON opennac.* TO 'admin'@'<slave_ip>' identified by '<admin_password>';

Note

- Run this statement once for each slave, using that slave's IP address.

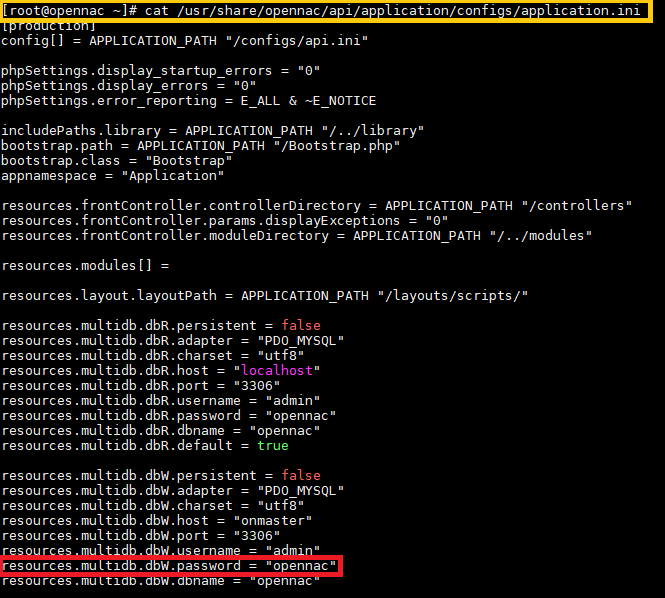

- The admin_password is the one stored on each slave in the file ‘/usr/share/opennac/api/application/configs/application.ini’, as the value of the field ‘resources.multidb.dbW.password’.

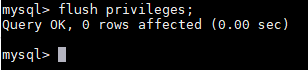

- Flush privileges

flush privileges;
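Because both GRANT statements have to be repeated for every slave, it can help to generate them rather than type them. The sketch below only prints SQL; it does not connect to MySQL. The function name `slave_grants` and the example IP address are hypothetical, and the sketch assumes the passwords contain no single quotes, since no SQL escaping is done.

```shell
# slave_grants SLAVE_IP REPLICATION_PASSWORD ADMIN_PASSWORD
# Prints the two GRANT statements from the steps above for one slave.
# Assumption: the arguments contain no single quotes (no escaping is done).
slave_grants() {
    printf "GRANT REPLICATION SLAVE ON *.* TO 'on_slave'@'%s' IDENTIFIED BY '%s';\n" "$1" "$2"
    printf "GRANT ALL PRIVILEGES ON opennac.* TO 'admin'@'%s' IDENTIFIED BY '%s';\n" "$1" "$3"
}

# Example usage (hypothetical values), reviewed before being pasted into mysql:
#   slave_grants 192.0.2.11 "$REPL_PASSWORD" "$ADMIN_PASSWORD_SLAVE1"
#   echo "FLUSH PRIVILEGES;"
```

Calling the function once per slave keeps the per-slave admin password separate from the shared replication password.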

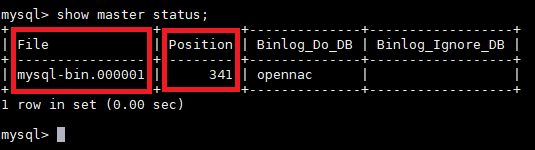

- Still inside mysql, check the master status and note the file and position for later use. Then exit mysql.

show master status;

exit

Note

The file and position values will be needed in the Slave configuration.
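To avoid copying the file and position by hand, the values can be captured from a non-interactive call. This is a sketch under assumptions: it relies on the standard `mysql` client options `-N` (skip column names), `-B` (batch, tab-separated output) and `-e`, and the function name `parse_master_status` is hypothetical. The function itself only parses text from standard input.

```shell
# parse_master_status
# Reads one "SHOW MASTER STATUS" row (whitespace/tab separated, no header)
# from stdin and prints the first two columns as shell-style assignments.
parse_master_status() {
    # read fails on a final line without newline; accept it if a file name was read.
    read -r log_file log_pos _rest || [ -n "$log_file" ] || return 1
    printf 'MASTER_LOG_FILE=%s\nMASTER_LOG_POS=%s\n' "$log_file" "$log_pos"
}

# Example usage on the master (prompts for the root password):
#   mysql -u root -p -N -B -e 'SHOW MASTER STATUS' | parse_master_status
```

If binary logging is not enabled, the query returns no row and the function returns a non-zero status instead of printing empty values.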

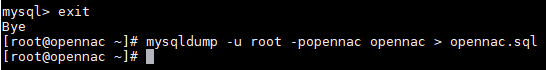

- Generate a dump of the openNAC database.

mysqldump -u root -p<mysql_root_password> opennac > opennac.sql

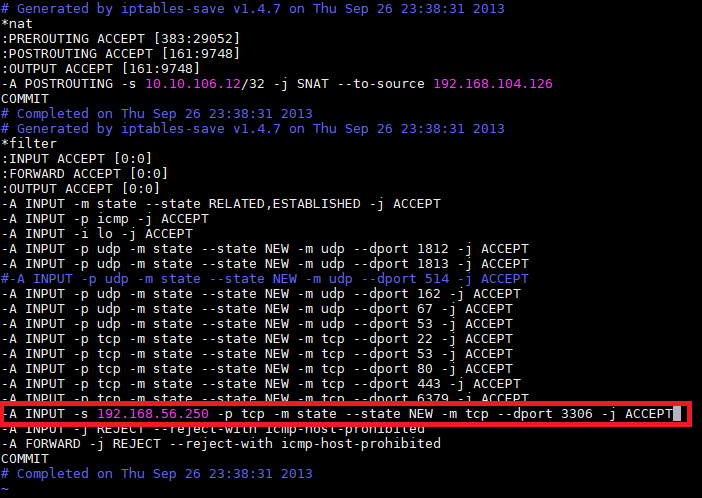

- Insert the firewall rule into the master’s iptables.

vim /etc/sysconfig/iptables

Add the following line, where slave_ip is the IP of the core that will hold the replicated database.

-A INPUT -s <slave_ip> -p tcp -m state --state NEW -m tcp --dport 3306 -j ACCEPT

Note

You need to configure a rule for each slave device with its own IP address.
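With several slaves, the rule lines can be generated from a list of addresses and then pasted into /etc/sysconfig/iptables. A minimal sketch follows; the function name `mysql_fw_rules` and the example addresses are hypothetical, and the function only prints text.

```shell
# mysql_fw_rules SLAVE_IP...
# Prints one iptables rule per slave, in the format shown above,
# allowing new TCP connections to the MySQL port (3306).
mysql_fw_rules() {
    for ip in "$@"; do
        printf '%s\n' "-A INPUT -s $ip -p tcp -m state --state NEW -m tcp --dport 3306 -j ACCEPT"
    done
}

# Example: rules for two slaves (hypothetical addresses).
mysql_fw_rules 192.0.2.11 192.0.2.12
```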

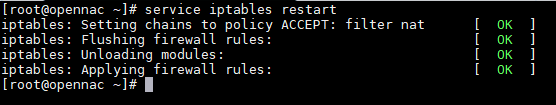

- Restart iptables service

service iptables restart

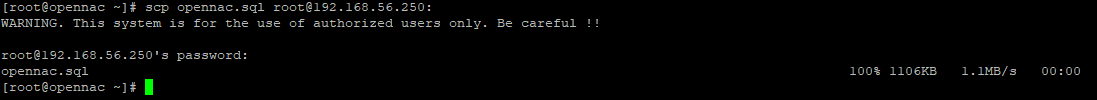

- Now send this dump to every slave (where slave_ip is the IP of the core that will hold the replicated database).

scp opennac.sql root@<slave_ip>:

On Slave Configuration

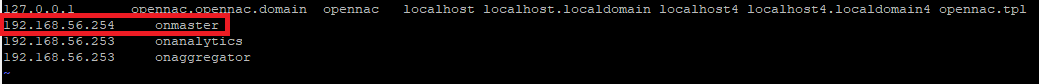

- Edit hosts file.

vim /etc/hosts

Change the IP of the onmaster entry to the IP of the MySQL master (master_ip).

<master_ip> onmaster
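The hosts-file change can also be scripted, which is convenient when many slaves have to point to the same master. The sketch below is an illustration only: the function name `set_onmaster` is hypothetical, and it assumes the onmaster entry sits on a line of its own, because any existing line that names onmaster is dropped before the new mapping is appended.

```shell
# set_onmaster HOSTS_FILE MASTER_IP
# Rewrites HOSTS_FILE so that "onmaster" resolves to MASTER_IP.
# Assumption: onmaster does not share its line with other host names.
set_onmaster() {
    hosts_file=$1
    master_ip=$2
    tmp=$(mktemp) || return 1
    # Keep every line that does not list onmaster as a host name.
    awk '{ keep = 1
           for (i = 2; i <= NF; i++) if ($i == "onmaster") keep = 0
           if (keep) print }' "$hosts_file" > "$tmp" || return 1
    printf '%s onmaster\n' "$master_ip" >> "$tmp"
    # Overwrite in place so the original file keeps its owner and mode.
    cat "$tmp" > "$hosts_file" && rm -f "$tmp"
}

# Example usage as root (hypothetical address):
#   set_onmaster /etc/hosts 192.0.2.10
```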

- Import the opennac.sql database file.

mysql -u root -p<mysql_root_password> opennac < opennac.sql

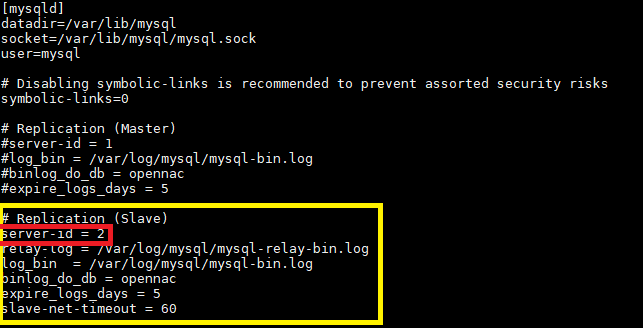

- Edit /etc/my.cnf and uncomment the “Replication (Slave)” section.

vim /etc/my.cnf

Change the server-id to a unique number. For example, with three slaves, the master has server-id=1, the first slave has 2, the second 3, and the third 4.
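The numbering scheme described above (master is 1, slaves count up from 2) can be written down as a short helper that prints the server-id to configure on each node. This is only a sketch of that scheme; the function name `assign_server_ids` and the slave names are hypothetical.

```shell
# assign_server_ids SLAVE...
# Prints the server-id for the master (always 1) and for each slave,
# numbering the slaves 2, 3, 4, ... in the order given.
assign_server_ids() {
    id=1
    printf 'master server-id=%s\n' "$id"
    for slave in "$@"; do
        id=$((id + 1))
        printf '%s server-id=%s\n' "$slave" "$id"
    done
}

# Example with three slaves (hypothetical names).
assign_server_ids slave-a slave-b slave-c
```

What matters for replication is that no two servers in the farm share a server-id; sequential numbering is just the convention used in this guide.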

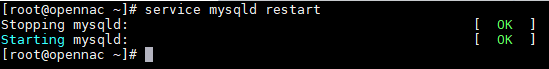

- Restart the mysql service.

service mysqld restart

- Access the mysql cli.

mysql -u root -p<mysql_root_password> opennac

- Configure the replication.

Note

- Use the password created in step 4 (Grant permissions) of the master configuration.

Note

- The file and position must be the values obtained in step 7 of the master configuration (show master status).

CHANGE MASTER TO MASTER_HOST='onmaster',MASTER_USER='on_slave',MASTER_PASSWORD='<password>',MASTER_LOG_FILE='<file>',MASTER_LOG_POS=<position>;
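The statement above combines three values noted earlier, and MASTER_LOG_POS must be an unquoted number. A small helper can assemble the statement and reject a non-numeric position before anything reaches MySQL. This is a sketch: the function name `change_master_sql` is hypothetical, it only prints SQL, and it assumes the password and file name contain no single quotes.

```shell
# change_master_sql PASSWORD LOG_FILE LOG_POS
# Prints the CHANGE MASTER TO statement used in this guide.
# Assumption: PASSWORD and LOG_FILE contain no single quotes.
change_master_sql() {
    case $3 in
        ''|*[!0-9]*)
            echo "position must be a number: '$3'" >&2
            return 1 ;;
    esac
    printf "CHANGE MASTER TO MASTER_HOST='onmaster', MASTER_USER='on_slave', MASTER_PASSWORD='%s', MASTER_LOG_FILE='%s', MASTER_LOG_POS=%s;\n" "$1" "$2" "$3"
}

# Example usage with the values noted on the master (hypothetical values):
#   change_master_sql "$REPL_PASSWORD" mysql-bin.000001 107
```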

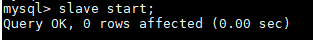

- Start the slave.

START SLAVE;

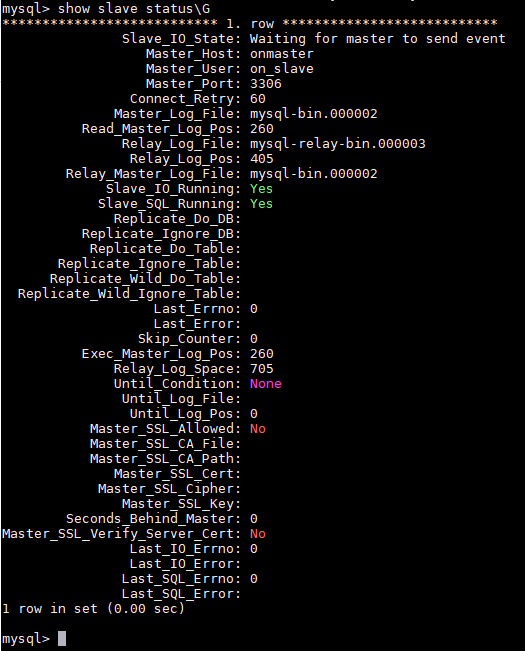

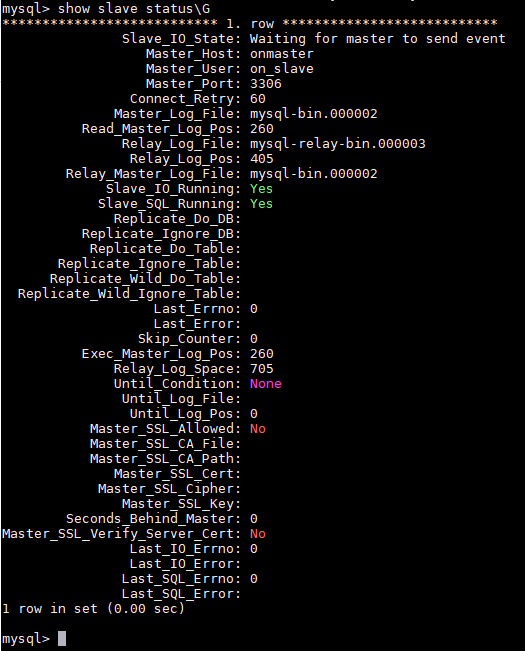

- Check that there are no errors.

show slave status\G

On all Servers

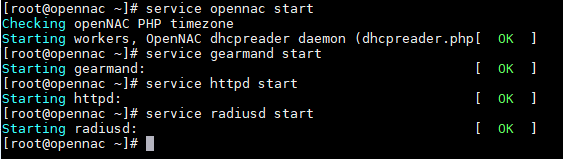

Start services on all servers

service opennac start

service gearmand start

service httpd start

service radiusd start

Verify Slave Status

- Check that everything is still working and that there are no errors.

show slave status\G
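In the output of the command above, the fields that usually matter most are Slave_IO_Running and Slave_SQL_Running, which should both read Yes, and Last_Error, which should be empty. The check can be automated by parsing the vertical output. The following is a sketch: the function name `check_slave_status` is hypothetical, and it only reads text from standard input.

```shell
# check_slave_status
# Reads "SHOW SLAVE STATUS\G" output on stdin.
# Succeeds only if both replication threads report "Yes".
check_slave_status() {
    awk -F': ' '
        { sub(/^ +/, "", $1) }                 # strip the left padding
        $1 == "Slave_IO_Running"  { io = $2 }
        $1 == "Slave_SQL_Running" { sql = $2 }
        $1 == "Last_Error"        { err = $2 }
        END {
            if (io == "Yes" && sql == "Yes") { print "replication OK"; exit 0 }
            printf "replication NOT OK (IO=%s, SQL=%s, Last_Error=%s)\n", io, sql, err
            exit 1
        }'
}

# Example usage on a slave (prompts for the root password):
#   mysql -u root -p -e 'SHOW SLAVE STATUS\G' | check_slave_status
```

The non-zero exit status on failure makes the function usable from a monitoring script or cron job.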

Slave On Master

When deploying an openNAC farm with master-slave replication, some scenarios require a Slave On Master, which takes over the role of master server if the primary On Master server becomes unavailable.

How it works:

- This server is configured as a replication master, with a few differences.

- It is also configured as a replication slave, like any other slave server.

- The updates it receives from the primary master are replicated to the slaves configured to replicate from the Slave On Master.

- If the primary On Master goes offline, the slave servers can be reconfigured to replicate from the Slave On Master.

- Greater fault tolerance, faster failover, and better load distribution between masters can be achieved when half of the farm’s slaves replicate from the primary On Master and the other half replicate from the Slave On Master.

- Since this setup is not an automatic clustering solution, failover/switchover of the farm replication requires manual intervention.
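The half-and-half distribution mentioned in the list above can be planned by alternating the slaves between the two masters. The sketch below only prints a plan and changes nothing; the function name `split_slaves` and the slave names are hypothetical.

```shell
# split_slaves SLAVE...
# Alternates the given slaves between the primary On Master and the
# Slave On Master, so each receives about half of the farm.
split_slaves() {
    n=0
    for slave in "$@"; do
        if [ $((n % 2)) -eq 0 ]; then
            printf '%s -> primary On Master\n' "$slave"
        else
            printf '%s -> Slave On Master\n' "$slave"
        fi
        n=$((n + 1))
    done
}

# Example with four slaves (hypothetical names).
split_slaves slave-a slave-b slave-c slave-d
```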

Slave On Master Configuration

Perform the following configuration steps, described above in the section “On Slave Configuration”:

Step 1 - Edit hosts file.

Step 2 - Import the opennac.sql database file. This file is the dump from the primary On Master server.

Step 3 - Edit /etc/my.cnf and uncomment the “Replication (Slave)” section. In addition to the existing commented lines, insert the configuration entry “log-slave-updates = true” in this section. The final configuration of the section must be:

#Replication (Slave)

relay-log = /usr/share/opennac/mysql/mysql-relay-bin.log

log_bin = /usr/share/opennac/mysql/mysql-bin.log

binlog_do_db = opennac

expire_logs_days = 5

slave-net-timeout = 60

log-slave-updates = true

Step 4 - Restart the mysql service (service mysqld restart).

Step 5 - Access the mysql cli.

Step 6 - Configure the replication.

Step 7 - Start the slave.

Step 8 - Check that there are no errors.

Note

The line “log-slave-updates = true” makes this server, which is also a slave, write the updates that it receives from the primary master and that are executed by its SQL thread to its own binary log. That binary log is in turn replicated to the other slave servers when this server acts as their master.
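Since a missing or still-commented log-slave-updates entry would silently prevent the Slave On Master from passing updates on, it may be worth checking the file before restarting MySQL. The following is a sketch: the function name `has_log_slave_updates` is hypothetical, and it relies on MySQL accepting dashes and underscores interchangeably in option names.

```shell
# has_log_slave_updates CONFIG_FILE
# Succeeds if CONFIG_FILE contains an active (uncommented)
# log-slave-updates entry, with or without an enabling value.
has_log_slave_updates() {
    grep -Eiq '^[[:space:]]*log[-_]slave[-_]updates([[:space:]]*=[[:space:]]*(1|true|on))?[[:space:]]*$' "$1"
}

# Example usage:
#   has_log_slave_updates /etc/my.cnf && echo "log-slave-updates is enabled"
```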

- Perform the following configuration steps, described above in the section “On Master Configuration”:

- Step 1 - Edit /etc/my.cnf and uncomment the “Replication (Master)” section. Remember to assign the proper server-id.

- Step 2 - Restart mysql.

- Step 3 - Access mysql.

- Step 4 - Grant permissions.

- Step 5 - Grant privileges.

- Step 6 - Flush privileges.

- Step 7 - Still inside mysql, check the master status and note the file and position for later use. Then exit mysql.

- Step 9 - Insert the firewall rule into the Slave On Master’s iptables.

- Step 10 - Restart iptables service.

- Steps 8 (generate the dump) and 11 (send the dump to the slaves) must be performed only if you already have other slave servers that will replicate from this Slave On Master, or if you are performing a manual switchover of slaves because the primary On Master has become unavailable.