2.3.4.1. ON Sensor basic configuration

This section describes the basic configuration of the ON Sensor component.

The default SSH credentials for all images are username root and password opennac.

Warning

Change the default password to a stronger password.

2.3.4.1.1. Network configuration

ON Sensor OVAs come configured with two network interfaces (eth0 and eth1).

We use eth0 as the management interface and for communication between nodes. For this, we configure an IP address in the same range as the other nodes, for example 192.168.56.252/24.

The eth1 interface is used for the Port Span: the sensor listens on it for network traffic, which can be received through SPAN, RSPAN or ERSPAN. RSPAN and ERSPAN make it possible, in some cases, to capture traffic from remote devices. This interface does not need any additional configuration.

To assign this IP address, we run the text-based network manager:

nmtui

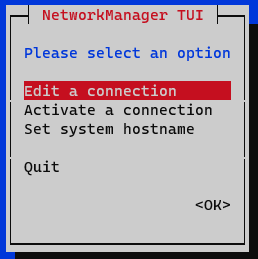

In the initial window we select Edit a connection.

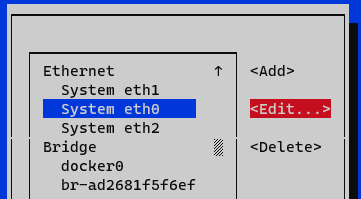

Select the interface and press Edit.

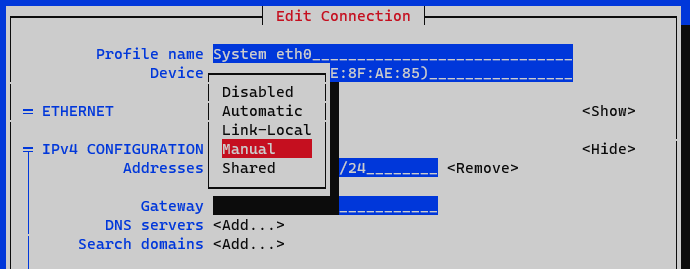

In the IPv4 Configuration section we select Manual.

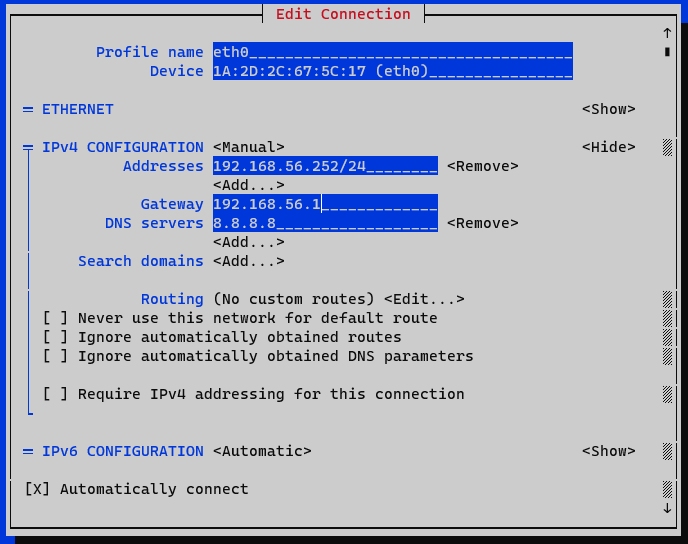

We display the IPv4 configuration by selecting the <Show> option.

Addresses: We add the IP of the node with the corresponding network mask (<IP>/<MASK>).

Gateway: We add the gateway of the node.

DNS Servers: We add a DNS server (for example, Google's public DNS server, 8.8.8.8).

We mark the option Require IPv4 addressing for this connection.

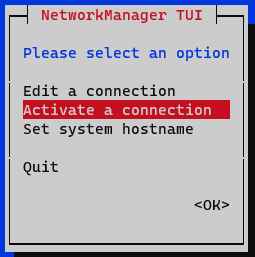

We finish the configuration by clicking <OK> at the bottom. The interface must now be deactivated and reactivated to apply the changes to the connection we have edited. In the menu we select the option Activate a connection.

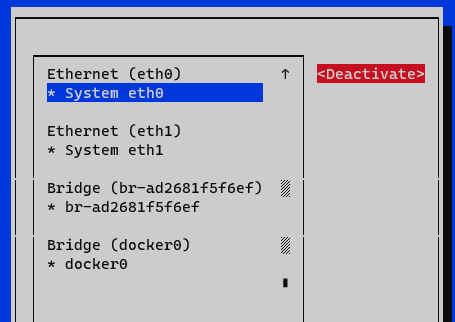

We deactivate and activate the interface and return to the initial menu.

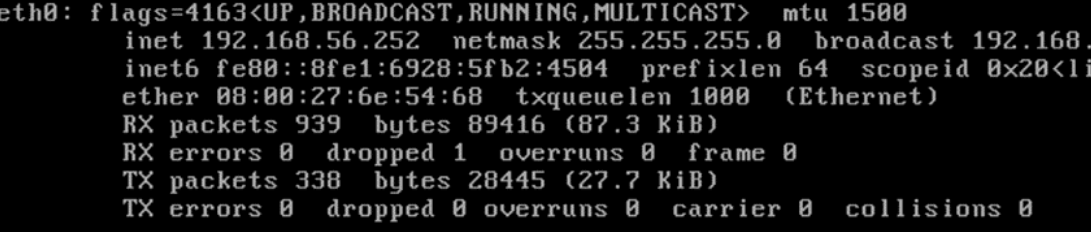
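As a non-interactive alternative to nmtui (useful when configuring several sensors), the same settings can be applied with nmcli, the NetworkManager command-line client. The following is a minimal sketch, not an openNAC tool. It makes the following assumptions:

- The connection profile is named after the interface, for example eth0. You can check this with nmcli connection show, because some systems name it "System eth0".
- The helper names nm_run and configure_static_ipv4 are hypothetical.
- By default the commands are only printed. Setting APPLY=1 runs them.

```shell
#!/usr/bin/env bash
# Sketch: apply the nmtui settings above with nmcli (dry run unless APPLY=1).
# Assumption: the NetworkManager connection profile is named after the interface.

# Print the command, or run it when APPLY=1 is set in the environment.
nm_run() {
  if [[ "${APPLY:-0}" == "1" ]]; then
    "$@"
  else
    echo "$*"
  fi
}

configure_static_ipv4() {
  local conn="$1" addr="$2" gw="$3" dns="$4"
  # ipv4.method manual  -> "IPv4 Configuration: Manual"
  # ipv4.may-fail no    -> "Require IPv4 addressing for this connection"
  nm_run nmcli connection modify "$conn" \
    ipv4.method manual ipv4.addresses "$addr" \
    ipv4.gateway "$gw" ipv4.dns "$dns" ipv4.may-fail no || return 1
  # Deactivate and reactivate the connection so the new settings are applied
  nm_run nmcli connection down "$conn"
  nm_run nmcli connection up "$conn"
}
```

For example, APPLY=1 configure_static_ipv4 eth0 192.168.56.252/24 192.168.56.1 8.8.8.8 applies the example address from this section. Here 192.168.56.1 is a hypothetical gateway; replace it with the real one.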

The node is now configured. We can verify it with the ip a command (or the legacy ifconfig command).

Next, we modify the /etc/hosts file to include the ON Sensor address (127.0.0.1), identified as onsensor, together with the addresses of the other nodes that form part of the architecture. An example is shown below:

vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

127.0.0.1 onsensor

192.168.56.254 onprincipal

192.168.56.253 onanalytics

192.168.56.253 onaggregator
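When the same entries must be added on several nodes, the edit can be scripted so that running it twice does not duplicate lines. This is a minimal sketch: add_host_entry and add_opennac_hosts are hypothetical helper names, and the IP addresses are the example values above, to be replaced with your own.

```shell
#!/usr/bin/env bash
# Sketch: add the openNAC node names to a hosts file without duplicating entries.
# The IPs below are the example values from this guide; replace them with your own.

add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # Skip if the name already appears as a hostname on any non-comment line
  if awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                       END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

add_opennac_hosts() {
  local file="${1:-/etc/hosts}"
  add_host_entry "$file" 127.0.0.1      onsensor
  add_host_entry "$file" 192.168.56.254 onprincipal
  add_host_entry "$file" 192.168.56.253 onanalytics
  add_host_entry "$file" 192.168.56.253 onaggregator
}
```

Running add_opennac_hosts with no argument edits /etc/hosts directly, so it should be run as root.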

At this point, we can access the ON Sensor server through the SSH connection using the default credentials:

User: root

Password: opennac

Remember that this is a default password and should be changed as soon as possible.

2.3.4.1.2. Change the hostname

To modify the hostname of the node we must use the following command:

hostnamectl set-hostname <hostname>

We recommend using the same name defined in /etc/hosts in the previous section, in this case onsensor.

Once the hostname has been modified, the node must be restarted to apply the changes:

reboot

To verify the hostname and obtain information about the equipment we can use the hostnamectl command.

2.3.4.1.3. Iptables configuration

The iptables rules must be configured on the sensor.

First of all, we need to copy the sensor iptables template to the iptables file:

yes | cp /usr/share/opennac/sensor/iptables_sensor /etc/sysconfig/iptables

To complete the sensor iptables configuration, we need to substitute the node variables (the <...> placeholders) in the following file:

vi /etc/sysconfig/iptables

# sample configuration for iptables service

# you can edit this manually or use system-config-firewall

# please do not ask us to add additional ports/services to this default configuration

*filter

:INPUT ACCEPT [0:0]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [0:0]

-A INPUT -m state --state RELATED,ESTABLISHED -j ACCEPT

-A INPUT -p icmp -j ACCEPT

-A INPUT -i lo -j ACCEPT

# SNMP rule would be enabled only from openNAC Core IPs

#-A INPUT -s <oncoreXX_ip> -p udp -m state --state NEW -m udp --dport 161 -j ACCEPT

# SSH port

-A INPUT -p tcp -m state --state NEW -m tcp --dport 22 -j ACCEPT

# DHCP-HELPER-READER

#-A INPUT -s <core_ip> -p udp -m state --state NEW -m udp --dport 67 -j ACCEPT

-A INPUT -j REJECT --reject-with icmp-host-prohibited

-A FORWARD -j REJECT --reject-with icmp-host-prohibited

COMMIT
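The placeholder substitution can also be done with sed. This is a minimal sketch with the following assumptions:

- fill_iptables_template is a hypothetical helper.
- A single ON Core IP is used for both the SNMP (161/udp) and DHCP-HELPER-READER (67/udp) rules. With several cores, duplicate the rule lines once per IP instead.
- It uncomments both rules. Leave a rule commented if that service is not used.

```shell
#!/usr/bin/env bash
# Sketch: fill in the <oncoreXX_ip> and <core_ip> placeholders of the
# sensor iptables template and uncomment the corresponding rules.
# Assumption: one ON Core IP is used for both rules.

fill_iptables_template() {
  local file="$1" core_ip="$2"
  # Basic sanity check on the IPv4 format before touching the file
  [[ "$core_ip" =~ ^([0-9]{1,3}\.){3}[0-9]{1,3}$ ]] || { echo "invalid IP: $core_ip" >&2; return 1; }
  # Remove the leading "#" and replace the placeholder with the real IP
  sed -i \
    -e "s|^#\(-A INPUT -s \)<oncoreXX_ip>|\1${core_ip}|" \
    -e "s|^#\(-A INPUT -s \)<core_ip>|\1${core_ip}|" \
    "$file"
}
```

Before restarting the service, the edited file can be syntax-checked without loading it, using iptables-restore --test /etc/sysconfig/iptables.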

Finally, restart the iptables service:

systemctl restart iptables

2.3.4.1.4. NTP Configuration

Before modifying the NTP parameters, we must stop the NTP service. We then need to enter a valid NTP server, for example hora.roa.es:

systemctl stop chronyd

If you have your own NTP server, you can use it instead.

We edit the /etc/chrony.conf file and include the appropriate servers:

server <ip_server_ntp>

We can also add an NTP pool with the line:

pool <IP_pool_ntp>

We save the file and start the service:

systemctl start chronyd
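The chrony.conf edit can be scripted so that a server line is only added once. In this minimal sketch, add_chrony_servers is a hypothetical helper. Existing server and pool lines are left untouched, so remove any that should no longer be used by hand.

```shell
#!/usr/bin/env bash
# Sketch: ensure chrony.conf contains a "server <name>" line for each NTP server given.
# Existing server/pool lines are not modified.

add_chrony_servers() {
  local file="$1"; shift
  local srv
  for srv in "$@"; do
    # awk compares whole fields, so dots in names/IPs need no escaping;
    # commented lines ("#server ...") do not count as existing entries
    if ! awk -v s="$srv" '$1 == "server" && $2 == s { found = 1 } END { exit !found }' "$file"; then
      printf 'server %s\n' "$srv" >> "$file"
    fi
  done
}
```

For example, run add_chrony_servers /etc/chrony.conf hora.roa.es between the stop and start commands above. Then check which sources chrony is using with chronyc sources.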

2.3.4.1.5. Repository configuration and corporate proxy

Before updating the nodes, make sure that:

The nodes have access to the internet, either directly or through a corporate proxy.

We have the credentials to access the openNAC repositories for node updates.

To configure both, we can use the configUpdates.sh script available on each node.

In ON Sensor we will find the script in:

/usr/share/opennac/sensor/scripts/configUpdates.sh

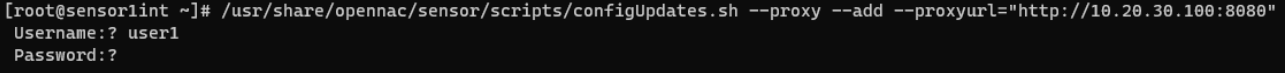

Corporate Proxy Authorization:

/usr/share/opennac/sensor/scripts/configUpdates.sh --proxy --add --proxyurl="http://<PROXY_IP>:<PROXY_PORT>"

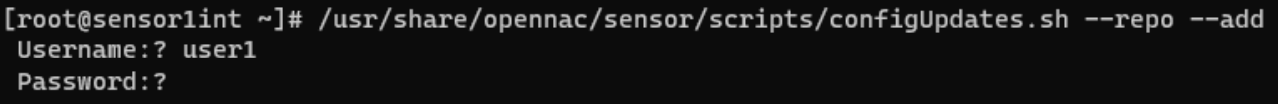

Repository credentials:

/usr/share/opennac/sensor/scripts/configUpdates.sh --repo --add

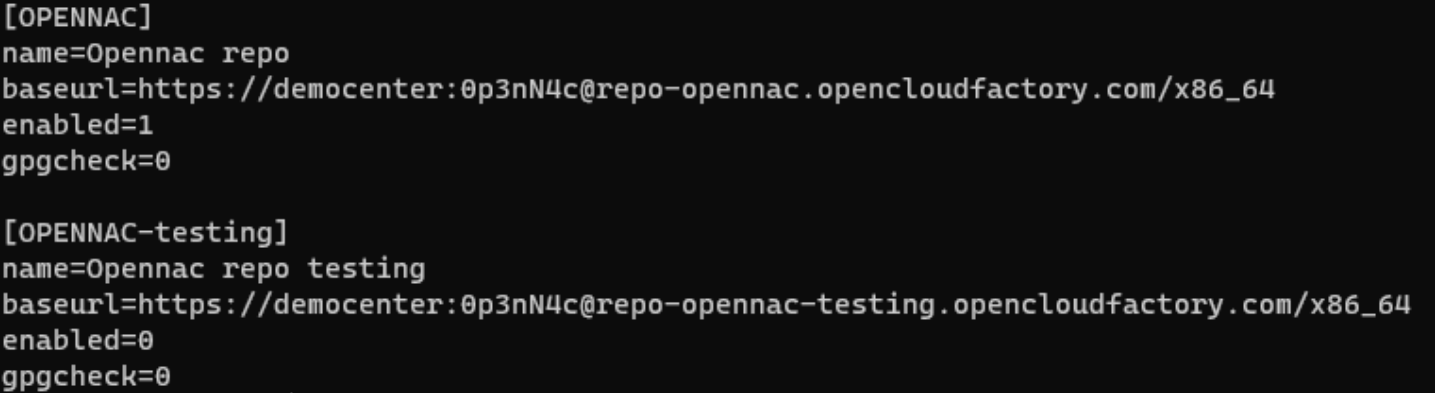

We can verify that the repository is correctly configured by checking the /etc/yum.repos.d/opennac.repo file.
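A quick way to review the file is to list the repository IDs it defines and whether each is enabled. This minimal sketch reads only standard .repo keys (the [id] section headers and enabled=). It makes no assumption about the other contents written by configUpdates.sh. list_repos is a hypothetical helper name. dnf repolist gives a similar view of all enabled repositories.

```shell
#!/usr/bin/env bash
# Sketch: print "<repo-id> <enabled>" for each section of a yum/dnf .repo file.
# dnf treats a repository as enabled when "enabled=" is absent, hence the default of 1.

list_repos() {
  local file="${1:-/etc/yum.repos.d/opennac.repo}"
  awk -F= '
    /^\[.*\]$/ { if (id != "") print id, en; id = substr($0, 2, length($0) - 2); en = 1; next }
    $1 ~ /^[[:space:]]*enabled[[:space:]]*$/ { v = $2; gsub(/[[:space:]]/, "", v); en = v }
    END { if (id != "") print id, en }
  ' "$file"
}
```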

2.3.4.1.6. Update to latest version

One of the recommended steps to take when the system is newly deployed is to update it to the latest version available.

To update the ON Sensor machine, follow the ON Sensor Update section. Once the update is finished, return to this point to continue.

2.3.4.1.7. Bro setup (Zeek)

At this point, we must configure the zeekctl.cfg file of the Zeek or Bro service to filter the system’s own traffic and avoid storing it in the Bro indexes.

We edit the /opt/zeek/etc/zeekctl.cfg file and add the desired filters, in this case one that excludes the traffic of the system's ON Core machines:

zeekargs = -f 'not host <ONCore_IP>'
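When there is more than one ON Core, the filter expressions can be combined with "and" in the capture-filter (BPF) syntax, for example 'not host A and not host B'. This minimal sketch builds that expression from a list of IPs and replaces any existing zeekargs line. set_zeek_exclusions is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: write a zeekargs line that excludes several ON Core IPs from capture,
# replacing any existing zeekargs line in zeekctl.cfg.

set_zeek_exclusions() {
  local file="$1"; shift
  local filter="" ip
  for ip in "$@"; do
    # Join the per-host exclusions with " and "
    filter+="${filter:+ and }not host ${ip}"
  done
  [[ -n "$filter" ]] || { echo "no IPs given" >&2; return 1; }
  local line="zeekargs = -f '${filter}'"
  if grep -q '^[[:space:]]*zeekargs[[:space:]]*=' "$file"; then
    # "|" is used as the sed delimiter because the line contains no "|"
    sed -i "s|^[[:space:]]*zeekargs[[:space:]]*=.*|${line}|" "$file"
  else
    printf '%s\n' "$line" >> "$file"
  fi
}
```

For example: set_zeek_exclusions /opt/zeek/etc/zeekctl.cfg <ONCore1_IP> <ONCore2_IP>. The change takes effect when the zeek service is restarted.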

Listening interface configuration

In some newer installations, the Bro service may have been renamed Zeek, so some directories must be accessed with the name zeek instead of bro.

We check that the Zeek listening interface is configured correctly:

vim /opt/zeek/etc/node.cfg

The name of the interface where the service listens should appear in the “interface” field:

[zeek]

type=standalone

host=localhost

interface=<interface_name>

If several listening interfaces are needed, we configure one worker per interface:

[logger]

type=logger

host=localhost

[manager]

type=manager

host=localhost

[proxy-1]

type=proxy

host=localhost

[worker-1]

type=worker

host=localhost

interface=interface1_name

[worker-2]

type=worker

host=localhost

interface=interface2_name
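The cluster layout above can be generated for any number of capture interfaces. This minimal sketch prints the same logger, manager and proxy sections, followed by one worker section per interface, with every role on localhost. gen_node_cfg is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: print a node.cfg with one logger, one manager, one proxy and
# one worker per capture interface, all on localhost.

gen_node_cfg() {
  [[ $# -gt 0 ]] || { echo "usage: gen_node_cfg IFACE..." >&2; return 1; }
  printf '[logger]\ntype=logger\nhost=localhost\n\n'
  printf '[manager]\ntype=manager\nhost=localhost\n\n'
  printf '[proxy-1]\ntype=proxy\nhost=localhost\n'
  local i=1 iface
  for iface in "$@"; do
    printf '\n[worker-%d]\ntype=worker\nhost=localhost\ninterface=%s\n' "$i" "$iface"
    i=$((i + 1))
  done
}
```

For example, back up the current file with cp /opt/zeek/etc/node.cfg /opt/zeek/etc/node.cfg.bak. Then write the new layout with gen_node_cfg eth1 eth2 > /opt/zeek/etc/node.cfg.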

We restart the service to apply the changes:

systemctl restart zeek

2.3.4.1.8. PF_RING Configuration

This service is configured automatically. In this example, eth0 is the management interface and eth1 is the capture interface, on which the pf_ring service sniffs the Port Span traffic.

System administrators may need to change the SPAN interface. To do so, we edit the /etc/pf_ring/interfaces.conf file according to the interface configuration. The value of CAPTURE_INTERFACES is the interface on which pf_ring sniffs traffic, and it must match the interface where the Port Span has been configured.

#MANAGEMENT_INTERFACES="eth0"

CAPTURE_INTERFACES="eth1"
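Changing the capture interface can be scripted as well. This minimal sketch handles a single interface name only; the syntax for several capture interfaces is not covered here. It replaces the active CAPTURE_INTERFACES line and leaves commented lines untouched. set_capture_interface is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: set CAPTURE_INTERFACES in a pf_ring interfaces.conf file
# (e.g. /etc/pf_ring/interfaces.conf) to a single interface name.

set_capture_interface() {
  local file="$1" iface="$2"
  # Accept only a conservative set of interface-name characters
  [[ "$iface" =~ ^[A-Za-z0-9_.-]+$ ]] || { echo "invalid interface name: $iface" >&2; return 1; }
  if grep -q '^[[:space:]]*CAPTURE_INTERFACES=' "$file"; then
    # Replace the active line; commented lines such as #MANAGEMENT_INTERFACES stay as-is
    sed -i "s|^[[:space:]]*CAPTURE_INTERFACES=.*|CAPTURE_INTERFACES=\"${iface}\"|" "$file"
  else
    printf 'CAPTURE_INTERFACES="%s"\n' "$iface" >> "$file"
  fi
}
```

Check the interface name with ip link before applying it, since a wrong name leaves pf_ring without traffic.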

Once pf_ring is configured, we must restart it, as well as the zeek service:

systemctl restart pf_ring

systemctl restart zeek

2.3.4.1.9. DHCP-Helper-Reader Configuration

To enable the service to read DHCP packets and validate relevant information for the Core, it is essential to specify the listening interface.

To do so, modify the /etc/sysconfig/dhcp-helper-reader file and define the interface in the following line:

OPTIONS="-r <interface> -s onmain -q onmain"
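Setting the interface can be done with a targeted sed substitution that only changes the argument after -r and keeps the remaining options (-s onmain -q onmain) as they are. This is a minimal sketch. set_dhcp_reader_iface is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: set the listening interface (-r) on the OPTIONS line of
# /etc/sysconfig/dhcp-helper-reader, keeping the other options unchanged.

set_dhcp_reader_iface() {
  local file="$1" iface="$2"
  [[ "$iface" =~ ^[A-Za-z0-9_.-]+$ ]] || { echo "invalid interface name: $iface" >&2; return 1; }
  grep -q '^OPTIONS=.*-r ' "$file" || { echo "no OPTIONS line with -r in $file" >&2; return 1; }
  # On the OPTIONS line, replace the first word after "-r " (up to a space or quote)
  sed -i "/^OPTIONS=/ s|-r [^ \"]*|-r ${iface}|" "$file"
}
```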

Once configured, restart the service:

systemctl restart dhcp-helper-reader

2.3.4.1.10. Healthcheck configuration

Healthcheck is the system monitoring module. It has service controls that ensure that all necessary system services are running properly.

On a fresh installation of ON Sensor, the first step is to check if the package is installed:

rpm -qa | grep opennac-healthcheck

If the healthcheck package is not installed, we need to install it:

dnf install opennac-healthcheck -y

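After installing it, we configure it by copying the sample files to their active names (run these commands from the directory that contains the sample files):

cp -rfv healthcheck.ini.sensor healthcheck.ini

cp -rfv application.ini.sample application.ini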
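Because the copy commands use relative paths, a wrapper that takes the directory explicitly and checks that both sample files exist avoids copying into the wrong place. This is a minimal sketch with the following assumptions:

- activate_healthcheck_config is a hypothetical helper.
- The directory path is passed as an argument, because this guide does not state where the sample files live.

```shell
#!/usr/bin/env bash
# Sketch: activate the sensor healthcheck configuration from the shipped samples.
# Assumption: $1 is the directory holding healthcheck.ini.sensor and application.ini.sample.

activate_healthcheck_config() {
  local dir="$1" f
  # Fail early if either sample file is missing
  for f in healthcheck.ini.sensor application.ini.sample; do
    [[ -f "$dir/$f" ]] || { echo "missing $dir/$f" >&2; return 1; }
  done
  cp -fv "$dir/healthcheck.ini.sensor" "$dir/healthcheck.ini"
  cp -fv "$dir/application.ini.sample" "$dir/application.ini"
}
```

The package check at the beginning of this section can also be written as rpm -q opennac-healthcheck || dnf install -y opennac-healthcheck. This works because rpm -q returns a non-zero status when the package is not installed.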

2.3.4.1.11. Unnecessary Services

Since the ON Sensor server uses the ON Analytics OVA, it is necessary to disable and uninstall the ON Analytics services to improve system performance.

Stop and disable the services:

systemctl stop kibana

systemctl stop logstash

systemctl stop elasticsearch

systemctl stop redis

systemctl disable kibana

systemctl disable logstash

systemctl disable elasticsearch

systemctl disable redis

Remove the packages:

dnf remove opennac-analytics elasticsearch logstash kibana

Redis must not be uninstalled because it is required by the TIME_SYNC healthcheck.
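The stop and disable steps above can be condensed into a loop using systemctl disable --now, which disables each unit and stops it in the same call. This minimal sketch follows the service list of this section, including Redis being disabled but not removed. svc_run and disable_analytics_services are hypothetical helper names. The commands are only printed unless APPLY=1 is set.

```shell
#!/usr/bin/env bash
# Sketch: stop/disable the ON Analytics services and remove their packages
# (dry run unless APPLY=1). Redis is disabled but its package is kept.

svc_run() {
  if [[ "${APPLY:-0}" == "1" ]]; then "$@"; else echo "$*"; fi
}

disable_analytics_services() {
  local svc
  for svc in kibana logstash elasticsearch redis; do
    # "disable --now" removes the unit from boot and stops it immediately
    svc_run systemctl disable --now "$svc"
  done
  # redis is intentionally not in this list (needed by the TIME_SYNC healthcheck)
  svc_run dnf remove opennac-analytics elasticsearch logstash kibana
}
```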

2.3.4.1.12. Collectd configuration

Collectd is used to send the trending information to the ON Principal. To configure Collectd it is necessary to edit the following file:

vim /etc/collectd.d/network.conf

Inside the file we should have something like:

# openNAC collectd file

#

# This file *WON'T* be automatically upgraded with default configuration,

# due to is only configured in deployment stage

LoadPlugin "network"

## When this server is a Core Principal or

## collect all data from other nodes

## Default port: 25826

#<Plugin "network">

# <Listen "{{ principal_IP }}">

# SecurityLevel "Encrypt"

# AuthFile "/etc/collectd.d/auth_file"

# </Listen>

#</Plugin>

## When this server send info to another

## server (ex. a Core Principal or similar)

## Default port: 25826

<Plugin "network">

<Server "onprincipal">

SecurityLevel "Encrypt"

Username "opennac"

Password "changeMeAsSoonAsPossible"

</Server>

</Plugin>

To identify this device in the collectd messages it sends, we edit:

vi /etc/collectd.conf

We set the server's hostname in the Hostname parameter:

#Hostname "localhost"

Hostname "onsensor-XX"
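The Hostname edit can be scripted so that exactly one active Hostname line remains. This minimal sketch works as follows:

- If an active Hostname line exists, it is replaced.
- Otherwise, the new line is added right after the commented #Hostname default.
- If neither is present, the line is appended at the end of the file.

set_collectd_hostname is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: set the Hostname directive in collectd.conf, keeping a single active line.

set_collectd_hostname() {
  local file="$1" name="$2"
  [[ "$name" =~ ^[A-Za-z0-9.-]+$ ]] || { echo "invalid hostname: $name" >&2; return 1; }
  if grep -q '^Hostname[[:space:]]' "$file"; then
    # Replace the existing active Hostname line
    sed -i "s|^Hostname[[:space:]].*|Hostname \"${name}\"|" "$file"
  elif grep -q '^#Hostname[[:space:]]' "$file"; then
    # Keep the commented default and add the active line right after it (GNU sed "a")
    sed -i "/^#Hostname[[:space:]]/a Hostname \"${name}\"" "$file"
  else
    printf 'Hostname "%s"\n' "$name" >> "$file"
  fi
}
```

For example: set_collectd_hostname /etc/collectd.conf onsensor-01, where onsensor-01 stands for this sensor's real hostname.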

Finally, it is necessary to restart the Collectd service.

systemctl restart collectd