2.3.7.2. ON Captive with multiple Workers

This configuration provides High Availability and load balancing between Captive Portal instances running on multiple servers. It supplies the persistence mechanisms needed to ensure that a client's connection is always forwarded to the correct backend, preventing request-processing errors.

For this scenario, the following premises apply:

- A valid DNS record resolves the FQDN of the captive portal URL to the IP address of the server running the HAProxy service (normally a proxy RADIUS server).
- If HAProxy redundancy is desired, simple DNS-based balancing across two or more HAProxy servers is not suitable, because DNS balancing alone does not ensure connection persistence. In that case, consider placing a load balancer in front of the HAProxy servers. If that is not possible, the DNS record for the captive portal FQDN must point to a single HAProxy server (and can be changed manually to point to another HAProxy server if that one becomes unavailable).
- The Captive Instance is assumed to be configured as “Installed in core” and to be replicated from the principal Core server to the Core Worker servers.
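The first premise can be checked from any host that uses the same DNS servers as the clients. The Python sketch below is an illustration, not part of the product: it assumes Python 3 and the standard library's socket.gethostbyname_ex (IPv4 only), and the helper names resolve_ipv4 and points_only_to are hypothetical. The expected address is whatever the FQDN should point to: the single HAProxy server, or the load balancer in front of the HAProxy servers. The check passes only when the FQDN resolves to that one address, so it also flags a plain DNS round-robin record.

```python
import socket
from typing import Callable, List, Optional


def resolve_ipv4(fqdn: str) -> List[str]:
    """Return the IPv4 addresses the local resolver reports for fqdn."""
    _name, _aliases, addresses = socket.gethostbyname_ex(fqdn)
    return addresses


def points_only_to(fqdn: str, expected_ip: str,
                   resolve: Optional[Callable[[str], List[str]]] = None) -> bool:
    """True if fqdn resolves to expected_ip and to no other address.

    Several addresses (plain DNS round-robin across HAProxy servers) or a
    failed lookup both return False.
    """
    resolver = resolve or resolve_ipv4
    try:
        addresses = set(resolver(fqdn))
    except OSError:  # socket.gaierror and socket.herror derive from OSError
        return False
    return addresses == {expected_ip}
```

For example, points_only_to("captive.example.com", "192.0.2.10") performs a real lookup; 192.0.2.10 is a placeholder for the HAProxy (or load balancer) address. The resolve argument exists only so the logic can be exercised without DNS.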

2.3.7.2.1. Captive Instance Configuration

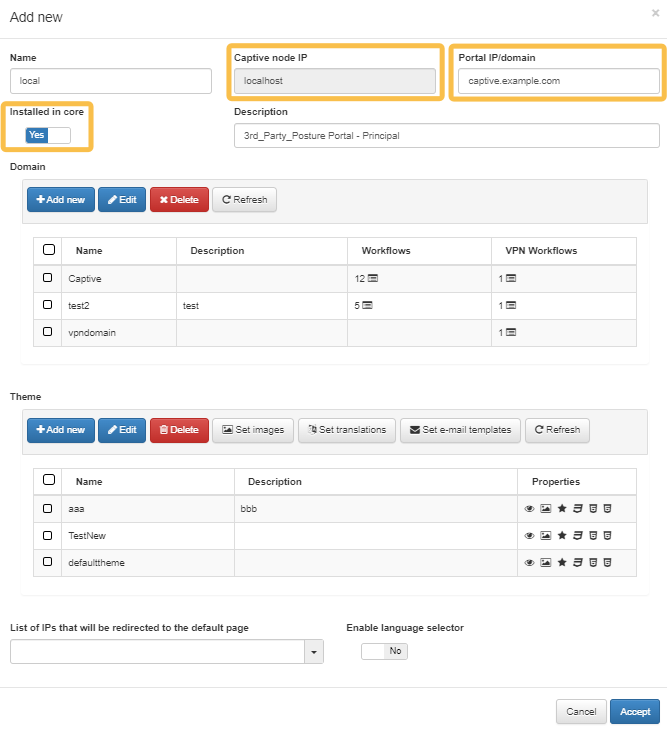

To balance the load among multiple workers, ensure that you properly configure the following fields in the Captive instances section:

- Captive node IP: must be set to localhost.
- Portal IP/Domain: must contain the FQDN of the portal URL, for example captive.example.com. This name is later added to the /etc/hosts file of the Core servers.
- Installed in core: must be set to “Yes”.
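These fields are set in the Captive instances form, so nothing here needs scripting on the openNAC side. As an illustration only, the Python sketch below encodes the three rules as a checklist. The dictionary keys (captive_node_ip, portal_domain, installed_in_core) and the function names are hypothetical; they mirror the form labels and do not correspond to any openNAC API or export format.

```python
import ipaddress
import re
from typing import Dict, List, Union

# Hypothetical field names for this sketch, mirroring the form labels.
Fields = Dict[str, Union[str, bool]]

# One DNS label: 1-63 letters, digits or hyphens, not starting/ending with "-".
_LABEL = re.compile(r"^(?!-)[A-Za-z0-9-]{1,63}(?<!-)$")


def is_fqdn(name: str) -> bool:
    """True for a dotted host name such as captive.example.com (not an IP)."""
    try:
        ipaddress.ip_address(name)
        return False  # an IP address is not an FQDN
    except ValueError:
        pass
    labels = name.rstrip(".").split(".")
    return len(labels) >= 2 and all(_LABEL.match(label) for label in labels)


def check_captive_instance(fields: Fields) -> List[str]:
    """Return the list of problems; an empty list means the fields look right."""
    problems = []
    if fields.get("captive_node_ip") != "localhost":
        problems.append("Captive node IP must be localhost")
    portal = fields.get("portal_domain")
    if not isinstance(portal, str) or not is_fqdn(portal):
        problems.append("Portal IP/Domain must be the portal FQDN")
    if fields.get("installed_in_core") is not True:
        problems.append('Installed in core must be set to "Yes"')
    return problems
```

Note that an IP address in Portal IP/Domain is rejected here on purpose: in this scenario the field must hold the FQDN that the Core servers later map in /etc/hosts.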

2.3.7.2.2. HAProxy Configuration

The haproxy service must be configured on the proxy RADIUS servers (or on any other server assigned to run haproxy) to support source balancing and cookie persistence. In addition, a new ACL for the captive workflow in use must be added. Edit the file /etc/haproxy/haproxy.cfg and follow the steps below:

On all servers running the haproxy service, add a new ACL to the frontend main section, below the comment #ACL List. In the example below, the ACL captiveACL was added for the captive workflow profile-guest-users, whose path is /profile-guest-users.

```
#ACL List
acl indexACL path -i / /index /index/init /index/check-session
acl captiveACL path_beg -i /profile-guest-users
acl themeACL path_beg -i /theme /fonts /css /js /img /bootstrap /pnotify /fileupload
acl agentACL path_beg -i /agent/download /opennac-agent /status /agent-update-file /agent/companies
acl samlACL path_beg -i /vpn /simplesaml
acl otpACL path_beg -i /otp
acl adminACL path_beg -i /admin
acl testbwACL path_beg -i /testbw
```
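The two matching methods in this list are easy to confuse: path -i compares the whole request path, case-insensitively, against each listed value, while path_beg -i only checks whether the path starts with one of them. The Python sketch below is a simplified model of those two rules applied to the ACL list above, not HAProxy code; the table layout and the function name matching_acls are hypothetical, and the query string is stripped first because HAProxy's path fetch excludes it.

```python
from typing import Dict, List, Tuple

# (kind, patterns) for each ACL, copied from the #ACL List above.
# "exact" models `path -i`, "prefix" models `path_beg -i`.
ACLS: Dict[str, Tuple[str, List[str]]] = {
    "indexACL": ("exact", ["/", "/index", "/index/init", "/index/check-session"]),
    "captiveACL": ("prefix", ["/profile-guest-users"]),
    "themeACL": ("prefix", ["/theme", "/fonts", "/css", "/js", "/img",
                            "/bootstrap", "/pnotify", "/fileupload"]),
    "agentACL": ("prefix", ["/agent/download", "/opennac-agent", "/status",
                            "/agent-update-file", "/agent/companies"]),
    "samlACL": ("prefix", ["/vpn", "/simplesaml"]),
    "otpACL": ("prefix", ["/otp"]),
    "adminACL": ("prefix", ["/admin"]),
    "testbwACL": ("prefix", ["/testbw"]),
}


def matching_acls(url_path: str) -> List[str]:
    """Names of the ACLs above that match a request path."""
    p = url_path.split("?", 1)[0].lower()  # drop query string; -i ignores case
    hits = []
    for name, (kind, patterns) in ACLS.items():
        pats = [x.lower() for x in patterns]
        if kind == "exact" and p in pats:
            hits.append(name)
        elif kind == "prefix" and any(p.startswith(x) for x in pats):
            hits.append(name)
    return hits
```

For example, matching_acls("/imgfoo") returns ["themeACL"], because path_beg is a plain string prefix rather than a directory match, and matching_acls("/webauth-guest-users") returns an empty list until an ACL for that workflow is added.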

Note that each captive workflow has its own path. The table below lists the existing captive workflows and the corresponding paths that can be used in the captiveACL definition. Configure the path that corresponds to the captive workflow actually in use.

| Captive Workflow | Path |
|---|---|
| profile-guest-users | /profile-guest-users |
| webauth-registered-users | /webauth-registered-users |
| webauth-guest-users | /webauth-guest-users |
| dot1x-registered-users | /dot1x-registered-users |
| dot1x-guest-users | /dot1x-guest-users |

If the instance has more than one captive workflow configured, add one ACL line per workflow, each with a distinct ACL name, and assign it the workflow's path from the table above.

Activate captiveACL by adding it to the use_backend app line, below the comment #Active ACLs:

```
#Active ACLs
use_backend app if indexACL or agentACL or themeACL or samlACL or otpACL or captiveACL
```

If multiple ACLs for different workflows were declared, all of them must be added to this line, joined with "or" as in the example above.
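When several workflows are in use, the ACL lines and the use_backend line follow a mechanical pattern. The Python sketch below generates both from the workflow table. The naming scheme produced by the hypothetical acl_name helper (for example captiveDot1xGuestUsersACL) is only a convention for this sketch, since any unique ACL name works, and the list of pre-existing ACLs is copied from the use_backend example above.

```python
from typing import List, Tuple

# Paths from the captive workflow table above.
WORKFLOW_PATHS = {
    "profile-guest-users": "/profile-guest-users",
    "webauth-registered-users": "/webauth-registered-users",
    "webauth-guest-users": "/webauth-guest-users",
    "dot1x-registered-users": "/dot1x-registered-users",
    "dot1x-guest-users": "/dot1x-guest-users",
}

# ACLs that the example "use_backend app" line already references.
BASE_ACLS = ["indexACL", "agentACL", "themeACL", "samlACL", "otpACL"]


def acl_name(workflow: str) -> str:
    """Hypothetical naming scheme: dot1x-guest-users -> captiveDot1xGuestUsersACL."""
    return "captive" + "".join(part.capitalize() for part in workflow.split("-")) + "ACL"


def captive_config(workflows: List[str]) -> Tuple[List[str], str]:
    """Return (lines for #ACL List, line for #Active ACLs)."""
    acl_lines = [f"acl {acl_name(w)} path_beg -i {WORKFLOW_PATHS[w]}" for w in workflows]
    names = BASE_ACLS + [acl_name(w) for w in workflows]
    return acl_lines, "use_backend app if " + " or ".join(names)
```

For ["profile-guest-users", "dot1x-guest-users"] it produces acl captiveProfileGuestUsersACL path_beg -i /profile-guest-users and acl captiveDot1xGuestUsersACL path_beg -i /dot1x-guest-users, plus a use_backend line ending in "or otpACL or captiveProfileGuestUsersACL or captiveDot1xGuestUsersACL".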

Change the configuration of the backend sections to use source balancing and cookie persistence. The example below configures the backend sections to balance requests between the Core servers onworker01 and onworker02. Any combination of principal and worker servers is valid, provided the captive portal instance is replicated on every server declared in the configuration.

```
backend app
    # Requests without a valid SERVER_USED cookie are placed by hashing the client source IP
    balance source
    # Pass the real client IP to the Core server
    http-request set-header X-Client-IP %[src]
    option forwardfor
    # Insert a SERVER_USED cookie so later requests stick to the same server
    cookie SERVER_USED insert indirect nocache
    server onworker01 onworker01:443 check cookie app1 ssl verify none
    server onworker02 onworker02:443 check cookie app2 ssl verify none

backend default
    balance source
    http-request set-header X-Client-IP %[src]
    option forwardfor
    cookie SERVER_USED insert indirect nocache
    # Rewrite every request path to / before forwarding
    http-request set-path /
    server onworker01 onworker01:443 check cookie app1 ssl verify none
    server onworker02 onworker02:443 check cookie app2 ssl verify none
```
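The two persistence layers apply in order: a request carrying a valid SERVER_USED cookie goes to the server named by that cookie (app1 or app2), and only requests without one are placed by balance source. The Python sketch below models that order for the two servers above. It is a simplified model under stated assumptions: SHA-256 stands in for HAProxy's own source-address hash, server weights are ignored, the up argument plays the role of the check health checks, and the function name pick_server is hypothetical.

```python
import hashlib
from typing import Dict, Optional, Set

# Cookie values from the "server ... cookie appN" lines above.
SERVERS: Dict[str, str] = {"app1": "onworker01", "app2": "onworker02"}


def pick_server(client_ip: str, cookie: Optional[str], up: Set[str]) -> str:
    """Simplified model: cookie persistence first, then source-IP hashing."""
    # 1. A SERVER_USED cookie naming a healthy server wins.
    if cookie in SERVERS and SERVERS[cookie] in up:
        return SERVERS[cookie]
    # 2. Otherwise hash the client address over the servers that pass "check".
    candidates = sorted(name for name in SERVERS.values() if name in up)
    if not candidates:
        raise RuntimeError("no backend server is up")
    digest = hashlib.sha256(client_ip.encode()).digest()
    return candidates[int.from_bytes(digest[:4], "big") % len(candidates)]
```

In this model a client without a cookie keeps landing on the same server only while the set of healthy servers stays the same; when a server goes down, clients can be re-hashed. A client holding a cookie keeps its server for as long as that server stays up.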

Restart the haproxy service to activate the changes:

```
systemctl restart haproxy
```
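Optionally, the configuration can be validated before the restart so that a typo in haproxy.cfg does not stop the service. The Python sketch below runs haproxy -c -f /etc/haproxy/haproxy.cfg (HAProxy's check mode, which parses the file and exits without binding ports) and restarts the service only if the check returns exit status 0. The helper names are hypothetical, and the runner argument exists only so the logic can be tried without touching a real server.

```python
import subprocess
from typing import Callable, List

CONFIG = "/etc/haproxy/haproxy.cfg"

Runner = Callable[[List[str]], int]


def run(cmd: List[str]) -> int:
    """Run a command and return its exit status."""
    return subprocess.run(cmd, check=False).returncode


def check_and_restart(runner: Runner = run, config: str = CONFIG) -> bool:
    """Validate the HAProxy configuration, then restart only if it is valid."""
    if runner(["haproxy", "-c", "-f", config]) != 0:
        return False  # leave the running instance on its current configuration
    return runner(["systemctl", "restart", "haproxy"]) == 0
```

Run it as root on each server running haproxy, after editing the file; a False result means either the check or the restart failed.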

2.3.7.2.3. Hosts File Configuration

On each ON Core server declared as a backend server in the haproxy configuration, edit the /etc/hosts file and add the FQDN from the Portal IP/Domain field of the captive instance configuration to the line for the Core server's LAN IP address.

In the example line below, taken from the onworker01 server, the Portal IP/Domain is captive.example.com and the server's LAN IP address is 192.168.150.10:

```
192.168.150.10 captive.example.com onworker01.example.local onworker01 oncore
```
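To confirm the entry on each Core server, the Python sketch below parses hosts-file text and checks that the portal FQDN appears on the Core server's LAN line and on no other line. The "no other line" condition is a conservative assumption: when the same name is listed for several addresses, which one is returned depends on the resolver configuration. The function names are hypothetical; pass the contents of /etc/hosts as the text argument.

```python
from typing import Dict, List


def parse_hosts(text: str) -> Dict[str, List[str]]:
    """Map each IP address in hosts-file text to the names listed for it."""
    entries: Dict[str, List[str]] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        ip, *names = line.split()
        entries.setdefault(ip, []).extend(names)
    return entries


def portal_on_core_line(text: str, core_ip: str, portal: str) -> bool:
    """True if the portal FQDN is listed for core_ip and for no other address."""
    portal = portal.lower()
    owners = [ip for ip, names in parse_hosts(text).items()
              if portal in (n.lower() for n in names)]
    return owners == [core_ip]
```

On onworker01, for example: portal_on_core_line(open("/etc/hosts").read(), "192.168.150.10", "captive.example.com").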